Queue ValueTaskAwaiter IAsyncStateMachineBox directly to ThreadPool by benaadams · Pull Request #21159 · dotnet/coreclr (original) (raw)

This repository was archived by the owner on Jan 23, 2023. It is now read-only.

Conversation47 Commits11 Checks0 Files changed

Conversation

This file contains hidden or bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.Learn more about bidirectional Unicode characters

[ Show hidden characters]({{ revealButtonHref }})

#20308 introduced some ExecutionContext faster paths when executing from the ThreadPool. However when running ASP.NET Core (stripped Platform); these paths are not used as the ValueTask continuation is provided to Pipelines as a Action<object>, object pair.

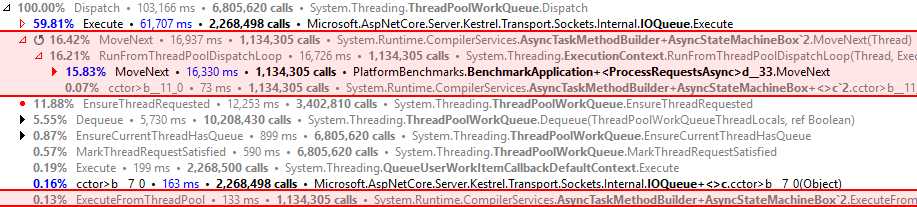

This leads to the allocation of a QueueUserWorkItemCallbackDefaultContext<TState> per ThreadPool queue and a more involved execution path:

We can recognise the callback for a ValueTaskAwaiter's AsyncStateMachineBox and instead queue directly to the ThreadPool both skipping the additional allocation and using the faster ExecutionContext path:

Resolves https://github.com/dotnet/coreclr/issues/20200

Thanks, but I don't like this direction. Every single queued work item is now paying for this, and whether that branch ends up being measurable or not, I don't think we should be tying the ThreadPool's implementation to a specific implementation detail of ValueTaskAwaiter and how it's used by a particular upstream component.

the only other clean way to solve this problem would be to expose the state machine as something that can be directly queued to the thread pool and expose something that the IValueTaskSource can implement (I proposed something similar on the issue) that can flow it so we can avoid the allocation.

to a specific implementation detail of ValueTaskAwaiter

Covers the four types as they all use the same callback:

ValueTaskAwaiterValueTaskAwaiter<TResult>ConfiguredValueTaskAwaitable.ConfiguredValueTaskAwaiterConfiguredValueTaskAwaitable<TResult>.ConfiguredValueTaskAwaiter

(though am sure you are aware 😄)

the only other clean

I don't think this way is clean :)

this problem

How much does this change alone reliably move the needle on plaintext throughput?

solve

As another approach, can't only one callback be happening at the same time on a given pipe reader/writer? If so, why not have the callback/state provided by OnCompleted be stored into the instance and have that instance be an IThreadPoolWorkItem that can be queued. Doesn't that also address the problem, while also handling any other cases where the pipe might want to queue something?

Yes that’s an option I’m exploring as well. It’s not clean either because we need to detect when the scheduler is the threadpool scheduler and queue the work item directly instead of using the scheduler (or introduce another abstraction and interface dispatch).

Could also remove the constraint onUnsafeQueueUserWorkItem(IThreadPoolWorkItem callBack, bool preferLocal) that throws for Task (instead checking the Task state is WaitingForActivation) then the caller could check if the state is IThreadPoolWorkItem and use that Queue method.

But I'd assume that's a leaky abstraction too horrible to contemplate :)

But I'd assume that's a leaky abstraction too horrible to contemplate :)

Yes. It also re-introduces all of the problems I was concerned about with regards to being able to queue Task objects.

My strong preference is for this to be handled via e.g. the pipe object containing the callback/state and implementing IThreadPoolWorkItem (assuming it actually needs to be handled). That's a key example of what the interface was added for. I get that it'll require a check to determine whether the PipeScheduler represents the ThreadPool, but I'd rather a check be done there rather than in QueueUserWorkItem itself. Doing so at that level avoids a virtual dispatch, but more importantly, I believe it's a cleaner approach because it's much more natural for Pipe to know about PipeScheduler than it is for ThreadPool to know about ValueTaskAwaiter.

It’s no different IMO you could invert that dependency by declaring the sentinel delegate a thread pool intrinsic and have the ValueTaskAwaiter use that instead of its own delegate.

Doing so at that level avoids a virtual dispatch

Also introduces an interface dispatch (IThreadPoolWorkItem.Execute vs Task.ExecuteFromThreadPool) and maintains a second interface cast+dispatch (is IAsyncStateMachineBox box).MoveNext() in System.Runtime.CompilerServices.ValueTaskAwaiter+<>c.cctor>b__11_1 which gets dropped.

I believe it's a cleaner approach because it's much more natural for Pipe to know about PipeScheduler than it is for ThreadPool to know about ValueTaskAwaiter.

This way its an implementation detail that's hidden at the same level in the runtime, and doesn't require users to wrap OnCompleted(Action<object> continuation, object state, short token, ValueTaskSourceOnCompletedFlags flags) in a IThreadPoolWorkItem

Although do still have to do special handling anyway for FlowExecutionContext == true.

you could invert that dependency by declaring the sentinel delegate a thread pool intrinsic and have the ValueTaskAwaiter use that instead of its own delegate.

cf79ed5 ?

Also introduces an interface dispatch (IThreadPoolWorkItem.Execute vs Task.ExecuteFromThreadPool)

That's not an extra dispatch, it just replaces one that would be virtual with one that's interface.

and doesn't require users to wrap

"Users" being the pipelines implementation itself? That's a pretty advanced "user". And in special casing at this level it misses out on optimizations that would apply automatically at the higher level. For example, AsTask doesn't benefit from the change at this level, but would if done at the higher level.

And doing it here affects every single unrelated call to QueueUserWorkItem, of which we expect there to be many. At the pipelines level, basically everything is relevant.

at the same level in the runtime

I don't see it as being "the same level" in the runtime. It's an unfortunate coupling from my perspective.

Doing so at that level avoids a virtual dispatch

But you're right that we still overall end up with the same number of dispatches. Either the pipes code does an UnsafeQueueUserWorkItem(IThreadPoolWorkItem), where that ITPWI.Execute (first dispatch) will invoke IAsyncStateMachineBox (second dispatch), or the pipes code does a PipeScheduler.Execute (first dispatch) which will use this approach to invoke Task.ExecuteFromThreadPool (second dispatch).

Moved to UnsafeQueueUserWorkItem since that's now public and doesn't flow context.

"Users" being the pipelines implementation itself? That's a pretty advanced "user".

This might be true 😄

Would also flow through ManualResetValueTaskSourceCore<TResult> when RunContinuationsAsynchronously so IAsyncEnumerables too? 🤔

I can buy the cost thing (if It isn’t negligible) but the BCL does this sorta thing all over. I’m not sure why this crosses the line. We use internal guts of things all over the place so it’s hard to undeterstand (for me personally) when these hacks go too far.

Either the pipes code does an ...

It might also gain from changing PipeCompletion to an object rather than a large struct; but also its kinda messy as the IThreadPoolWorkItem would need to be implemented twice once for reading and once for writing which would require two types. (Though could always inherit one type to two empty types for that)

@benaadams Ideally, we would try to reuse the allocation we already have (the PipeReader and PipeWriter objects but those today are just wrappers over the pipe so they would need to be refactored.

Aside: there are two main sources of ThreadPool Queues, Pipelines and IOQueue.

IOQueue is definitely a IThreadPoolWorkItem scenario as its doing its own thing; and I have PR for ASP.NET Core to move to that for when their repo merge is complete.

Pipelines is a more general IValueTaskSource type thing; which seems like a more common pattern that could benefit.

A alternative approach to wrapping Pipelines in IThreadPoolWorkItem would be to re-platform Pipelines on ManualResetValueTaskSourceCore; and do the special casing in MRVTSC but I don't think that would work very easily as it is both .NET Standard and has a different shape of scheduler.

The advantage of using IThreadPoolWorkItem in Pipelines is it would work for Default and non-Default contexts, and it would be less #ifdefy than MRVTSC.

The advantage of this PR's approach is it just works with no code changes to Pipelines other than using the new generic UnsafeQueueUserWorkItem overload; which was a desired change anyway https://github.com/dotnet/corefx/issues/32924.

The disadvantage is it doesn't handle the non-Default context case, so allocations would still occur then (unlike IThreadPoolWorkItem).

@dotnet-bot test Ubuntu x64 Checked Innerloop Build and Test (Jit - TieredCompilation=0)

Just because we've transgressed in the past and coupled things that shouldn't have been doesn't mean we should do it more. I like this better than I did before the delegate being owned by the thread pool, but I still don't love that the thread pool now needs to know about IAsyncStateMachineBox nor that it needs to check the callback every time anything is queued, regardless for whether it's related or not. Until very recently, ThreadPool didn't even know about Task, and that was only necessary to enable the public IThreadPoolWorkItem, the primary motivation of which was to provide an extensibility mechanism to avoid exactly this kind of dependency. And this doesn't help with AsTask, whereas checking in pipelines would.

That said, I'll noodle on it more while I'm away from my computer for the holiday.

Please separate out the unrelated MRVTSC change into its own PR. But note that it'll be very rare for the context to be non-null... so I'm not convinced it's worth adding the default check and increasing the amount of code. That would only be hit if someone called ValueTask.GetAwaiter().OnCompleted directly.

@dotnet-bot test OSX10.12 x64 Checked Innerloop Build and Test

And this doesn't help with AsTask

Turned ValueTaskSourceAsTask into an IAsyncStateMachineBox on Core so they can use same delegate and .AsTask also can take advantage of this change

To my earlier question:

"How much does this change alone reliably move the needle on plaintext throughput?"

Any success getting such numbers?

But that hurts the case where the continuation isn't queued, e.g. ReadAsync().AsTask() on an unbounded channel that allows synchronous continuations, increasing the size of the resulting task by ~10% without commensurate throughput gains.

This isn't the default though right?

This isn't the default though right?

Not for channels. It is for sockets, e.g. socket.ReceiveAsync(...).AsTask().

Turned ValueTaskSourceAsTask into an IAsyncStateMachineBox on Core so they can use same delegate and .AsTask also can take advantage of this change

But that hurts the case where the continuation isn't queued, e.g. ReadAsync().AsTask() on an unbounded channel that allows synchronous continuations, increasing the size of the resulting task by ~10% without commensurate throughput gains.

Can shrink it by ~10%; by reverting, but then .AsTask() doesn't take advantage of the change and instead pays a reference equality check if it is queued to the ThreadPool (which should be a fast check). Just pointing out it could take advantage of it if its a common path?

The reference check also won't regress existing consumers of UnsafeQueueUserWorkItem<TState>(Action<TState> callBack, TState state, bool preferLocal) as their currently aren't any as the api isn't exposed (yet).

However anything new that either does naive queuing of IValueTaskSource continuations or is based on ManualResetValueTaskSourceCore will automatically take advantage of it.

Pushing more things down the IThreadPoolWorkItem route (which is very valuable in other circumstances); will increase the polymorphism of the interface dispatch stub as well as being more complex and requiring an object to implement IThreadPoolWorkItem to be queued.

Whereas this is providing a ThreadPool.UnsafeQueueUserWorkItemInternal(box, preferLocal: false) entry point without needing to formalize or expose any implementation details like IStateMachineBoxAwareAwaiter and IAsyncStateMachineBox.

e.g. currently could you write a non-allocating YieldAwaitable outside of coreclr; aside from pooling IThreadPoolWorkItems to resuse?

With this change it would for await MyYielder.Yield() below (on Default EC, no scheduler)

public static class MyYielder { private readonly static Yielder Default = new Yielder();

public static ValueTask Yield() => new ValueTask(Default, default);

private class Yielder : IValueTaskSource

{

ValueTaskSourceStatus GetStatus(short token) => ValueTaskSourceStatus.Succeeded;

void OnCompleted(Action<object> continuation, object state, short token, ValueTaskSourceOnCompletedFlags flags)

{

bool flowContext = (flags & ValueTaskSourceOnCompletedFlags.FlowExecutionContext) != 0;

if ((flags & ValueTaskSourceOnCompletedFlags.UseSchedulingContext) != 0)

{

Schedule(continuation, state, flowContext);

}

else if (flowContext)

{

ThreadPool.QueueUserWorkItem(continuation, state, preferLocal: false);

}

else

{

// Non-allocating

ThreadPool.UnsafeQueueUserWorkItem(continuation, state, preferLocal: false);

}

}

void Schedule(Action<object> continuation, object state, bool flowContext)

{

// ...

}

void GetResult(short token) { }

}}

"How much does this change alone reliably move the needle on plaintext throughput?"

Will get back to you :)

For the non-pipelined json it does have the desired reduction in allocations:

The second remaining biggest allocator QUWIDC (the Json object is required by the rules); will disappear when the ASP.NET build process stabilises for 3.0 and the IOQueue can move to be a IThreadPoolWorkItem; since its just constantly reused.

Raw performance is more tricky to measure until dotnet/corefx#33658 is merged; as I have to put it in the non-unsafe path to make use of it; which then also effects the other threadpool queueing.

| // internally we call UnsafeQueueUserWorkItemInternal directly for Tasks. |

|---|

| if (ReferenceEquals(callBack, ThreadPoolGlobals.s_invokeAsyncStateMachineBox)) |

| { |

| if (!(state is IAsyncStateMachineBox)) |

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Debug.Assert this?

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

As its two fields, could be stored in a struct and be submitted during a torn write to that struct (or someone could wrongly cache the callback, but update the state).

The callback itself does the type check; but here we are bypassing the callback and running directly in the threadpool when if the state isn't a Task it will do an unsafe cast: Unsafe.As<IThreadPoolWorkItem>(workItem).Execute(); so we can't let the dodgy state go down this path and get onto the ThreadPool.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

i.e. it wouldn't just be exception bad; but would be type system violation bad

| @@ -37,6 +37,20 @@ internal static class ThreadPoolGlobals |

|---|

| public static bool enableWorkerTracking; |

| public static readonly ThreadPoolWorkQueue workQueue = new ThreadPoolWorkQueue(); |

| #if CORECLR |

| /// |

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Nit: this comment was wrong before and is still wrong in the new location :) Copy and paste error on my part. It should be "IAsyncStateMachineBox.MoveNext", not "ITaskCompletionAction.Invoke".

| @@ -1333,6 +1347,25 @@ public static bool UnsafeQueueUserWorkItem(Action callBack, TSta |

|---|

| ThrowHelper.ThrowArgumentNullException(ExceptionArgument.callBack); |

| } |

| #if CORECLR |

| // If the callback is the runtime provided invocation of an IAsyncStateMachineBox, |

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Nit: runtime provided => runtime-provided

| @@ -37,6 +37,20 @@ internal static class ThreadPoolGlobals |

|---|

| public static bool enableWorkerTracking; |

| public static readonly ThreadPoolWorkQueue workQueue = new ThreadPoolWorkQueue(); |

| #if CORECLR |

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Are these new "#if CORECLR"s needed? ThreadPool.cs is not currently shared.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Wasn't sure if they were needed for Mono and if having references to IAsyncStateMachineBox at the shared src level was problematic. Will remove.

Thank you for the additional details.

I would still like to see what kind of impact this has on throughput, but while I still don't like that ThreadPool now has to know about IAsyncStateMachineBox, I agree this adds more benefit than it costs and we should do it. I would like to subsequently see if we could remove that dependency, though, e.g. by minimizing the number of places we use IAsyncStateMachineBox and instead referring to it in various places, in particular here, as a Task. That would also have the small benefit of being able to invoke it via a virtual rather than interface dispatch.

But that's not for this PR. Let's just address the nits and consider this done.

The ThreadPool only uses IAsyncStateMachineBox for the type check in UnsafeQueueUserWorkItem; it then runs it as a Task in ThreadPoolWorkQueue.Dispatch via ExecuteFromThreadPool.

This also enables it to skip the Thread.CurrentThread lookup in ExecutionContext.RunInternal and use the shorter ExecutionContext.RunFromThreadPoolDispatchLoop(Thread threadPoolThread, ...) overload; where it gets passed the current thread and stores less as it knows it starts on the default context #20308

by minimizing the number of places we use IAsyncStateMachineBox and instead referring to it in various places, in particular here, as a Task

Instead of doing:

if (ReferenceEquals(callBack, ThreadPoolGlobals.s_invokeAsyncStateMachineBox)) { if (!(state is IAsyncStateMachineBox)) { ThrowHelper.ThrowArgumentOutOfRangeException(ExceptionArgument.state);

it could do

if (ReferenceEquals(callBack, ThreadPoolGlobals.s_invokeAsyncStateMachineBox)) { if (!(state is Task)) { ThrowHelper.ThrowArgumentOutOfRangeException(ExceptionArgument.state);

Then its only the sentinel/off-threadpool action s_invokeAsyncStateMachineBox that both ThreadPool.UnsafeQueueUserWorkItem and ValueTaskAwaiter need to know about.

Could add it to Task instead, so its Task.s_invokeAsyncStateMachineBox rather than ThreadPoolGlobals.s_invokeAsyncStateMachineBox?

This work? 281b398 (last commit) then ThreadPool no longer knows about IAsyncStateMachineBox

This work?

I suggested "But that's not for this PR" because there are other issues to discuss around the Task approach, e.g. this change let's you queue arbitrary tasks to the thread pool.

I suggested "But that's not for this PR"

Removed

e.g. this change let's you queue arbitrary tasks to the thread pool.

ish; you'd still need to provide the internal sentinel Action<object>. You are given it as a parameter so could capture there, which would be the main problem?

Or you could use reflection to get it; but then you could also get the UnsafeQueueUserWorkItemInternal method...

You are given it as a parameter so could capture there, which would be the main problem?

Right, that's the concern. At least to think about. We could decide it's too far fetched to worry about.

benaadams deleted the ValueTaskAwaiter-ThreadPool branch

Sorry has taken so long, had a bit of a hard time getting numbers and getting the versions of coreclr and System.IO.Pipelines to match up without getting overriden with different versions when BDN ran and having one part of the feature missing: https://github.com/dotnet/corefx/issues/33798#issuecomment-445511939

I have a suspicion the interface validation after the interface check is expensivestate is IAsyncStateMachineBox; but it looks to be balanced by it then moving to a Task virtual call for execution and the faster EC path.

https://gist.github.com/benaadams/932aef29a62f2e6fd5b00fb5cb85de21#file-quwi_pipelines-cs-L56-L85

Method | Chunks | Length | Mean |-------------------- |------- |------- |-----------:|

- Parse_ParallelAsync | 1 | 128 | 644.6 ns |

- Parse_ParallelAsync | 1 | 128 | 563.9 ns |

- Parse_ParallelAsync | 1 | 256 | 656.5 ns |

- Parse_ParallelAsync | 1 | 256 | 584.7 ns |

- Parse_ParallelAsync | 1 | 1024 | 728.5 ns |

- Parse_ParallelAsync | 1 | 1024 | 664.8 ns |

- Parse_ParallelAsync | 1 | 2048 | 866.6 ns |

- Parse_ParallelAsync | 1 | 2048 | 824.0 ns |

- Parse_ParallelAsync | 1 | 4096 | 1,120.2 ns |

- Parse_ParallelAsync | 1 | 4096 | 1,052.0 ns |

- Parse_ParallelAsync | 2 | 128 | 1,089.9 ns |

- Parse_ParallelAsync | 2 | 128 | 1,028.1 ns |

- Parse_ParallelAsync | 2 | 256 | 1,059.6 ns |

- Parse_ParallelAsync | 2 | 256 | 993.6 ns |

- Parse_ParallelAsync | 2 | 1024 | 1,133.7 ns |

- Parse_ParallelAsync | 2 | 1024 | 1,045.6 ns |

- Parse_ParallelAsync | 2 | 2048 | 1,168.1 ns |

- Parse_ParallelAsync | 2 | 2048 | 1,151.0 ns |

- Parse_ParallelAsync | 2 | 4096 | 1,344.0 ns |

- Parse_ParallelAsync | 2 | 4096 | 1,403.9 ns |

- Parse_ParallelAsync | 4 | 128 | 1,663.0 ns |

- Parse_ParallelAsync | 4 | 128 | 1,598.0 ns |

- Parse_ParallelAsync | 4 | 256 | 1,689.2 ns |

- Parse_ParallelAsync | 4 | 256 | 1,637.0 ns |

- Parse_ParallelAsync | 4 | 1024 | 1,725.4 ns |

- Parse_ParallelAsync | 4 | 1024 | 1,594.8 ns |

- Parse_ParallelAsync | 4 | 2048 | 1,761.2 ns |

- Parse_ParallelAsync | 4 | 2048 | 1,652.5 ns |

- Parse_ParallelAsync | 4 | 4096 | 1,925.5 ns |

- Parse_ParallelAsync | 4 | 4096 | 1,862.5 ns |

- Parse_ParallelAsync | 8 | 128 | 2,908.4 ns |

- Parse_ParallelAsync | 8 | 128 | 2,632.0 ns |

- Parse_ParallelAsync | 8 | 256 | 2,857.4 ns |

- Parse_ParallelAsync | 8 | 256 | 2,743.7 ns |

- Parse_ParallelAsync | 8 | 1024 | 3,079.3 ns |

- Parse_ParallelAsync | 8 | 1024 | 2,648.3 ns |

- Parse_ParallelAsync | 8 | 2048 | 2,912.5 ns |

- Parse_ParallelAsync | 8 | 2048 | 2,753.2 ns |

- Parse_ParallelAsync | 8 | 4096 | 3,060.1 ns |

- Parse_ParallelAsync | 8 | 4096 | 2,877.9 ns |

Note: isn't actually Parsing its just advancing the reader; so the improvement is mostly just measuring it in terms of the mechanism.

picenka21 pushed a commit to picenka21/runtime that referenced this pull request

Queue ValueTaskAwaiter IAsyncStateMachineBox directly to ThreadPool

Invert the dependency

Move to UnsafeQueueUserWorkItem

MRVTSC queue null or Deafult EC to UnsafeQUWI

Revert MRVTSC change

Add comment and validation

Use s_invokeAsyncStateMachineBox for AsTask

nits

nits 2

Rever ValueTask

nits

Commit migrated from dotnet/coreclr@e7ead79