augmented reality (AR)

What is augmented reality (AR)?

Augmented reality (AR) is the integration of digital information with the user's environment in real time. Unlike virtual reality (VR), which creates a totally artificial environment, AR users experience a real-world environment with generated perceptual information overlaid on top of it.

Augmented reality has a variety of uses, from assisting in the decision-making process to entertainment. AR is used to either visually change natural environments in some way or to provide additional information to users. The primary benefit of AR is that it manages to blend digital and three-dimensional (3D) components with an individual's perception of the real world.

AR delivers visual elements, sound and other sensory information to the user through a device like a smartphone, glasses or a headset. This information is overlaid onto the user's view of their surroundings to create an interwoven and immersive experience where digital information alters the user's perception of the physical world. The overlaid information can add to an environment or mask part of the natural environment.

Thomas Caudell, an employee of Boeing Computer Services, Research and Technology, coined the term augmented reality in 1990 to describe how the head-mounted displays that electricians used when assembling complicated wiring harnesses worked. One of the first commercial applications of augmented reality technology was the yellow first-down marker that began appearing in televised football games in 1998.

Today, smartphone games, mixed-reality headsets and heads-up displays (HUDs) in car windshields are the most well-known consumer AR products. But AR technology is also being used in many industries, including healthcare, public safety, gas and oil, tourism and marketing.

How does augmented reality work?

Augmented reality can be delivered in a variety of formats, including smartphones, glasses and headsets. AR contact lenses are also in development. The technology requires hardware components, such as a processor, sensors, a display and input devices. Mobile devices, like smartphones and tablets, already have this hardware onboard, making AR more accessible to the everyday user. Mobile devices typically contain sensors, including cameras, accelerometers, Global Positioning System (GPS) instruments and solid-state compasses. For AR applications on smartphones, for example, GPS is used to pinpoint the user's location, and the compass is used to detect device orientation.
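As a rough illustration of how a GPS fix and a compass heading can be combined, the sketch below works out where a label for a nearby point of interest would fall on screen. It is a minimal sketch, not how any particular AR app works: the function names, the 60-degree horizontal field of view and the 1080-pixel screen width are assumptions chosen for the example, and the mapping from angle to pixels is simplified to a straight line.

```python
import math

def bearing_deg(lat1, lon1, lat2, lon2):
    """Initial compass bearing from point 1 to point 2, in degrees [0, 360)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    y = math.sin(dlon) * math.cos(phi2)
    x = (math.cos(phi1) * math.sin(phi2)
         - math.sin(phi1) * math.cos(phi2) * math.cos(dlon))
    return math.degrees(math.atan2(y, x)) % 360.0

def screen_x(user_lat, user_lon, heading_deg, poi_lat, poi_lon,
             fov_deg=60.0, screen_width_px=1080):
    """Horizontal pixel position for a label, or None if the point is out of view.

    heading_deg is the compass direction the camera points (0 = north).
    fov_deg and screen_width_px are assumed values for this sketch.
    """
    target = bearing_deg(user_lat, user_lon, poi_lat, poi_lon)
    # Signed angle between the camera direction and the target, in [-180, 180).
    offset = (target - heading_deg + 180.0) % 360.0 - 180.0
    if abs(offset) > fov_deg / 2:
        return None
    # Simplified linear mapping: -fov/2 is the left edge, +fov/2 the right edge.
    return round((offset / fov_deg + 0.5) * screen_width_px)
```

With these assumptions, a point directly ahead of the camera lands in the middle of the screen, and a point behind the user is not drawn at all.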

Sophisticated AR programs, such as those used by the military for training, might also include machine vision, object recognition and gesture recognition. AR can be computationally intensive, so if a device lacks processing power, data processing can be offloaded to a different machine.

Augmented reality apps work using either marker-based or markerless methods. Marker-based AR applications are built with special 3D programs that let developers tie animation or contextual digital information to an augmented reality marker in the real world. When a computing device's AR app or browser plugin recognizes a known marker, it executes the code associated with that marker and layers the correct image or images over the view.
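The marker-based flow can be pictured as a lookup from marker to content. The sketch below assumes that some separate detection step has already produced the IDs of the markers visible in a camera frame; the `MarkerRegistry` and `Overlay` names and the asset file names are hypothetical and stand in for whatever an actual AR toolkit provides.

```python
from dataclasses import dataclass
from typing import Dict, List

@dataclass(frozen=True)
class Overlay:
    asset: str          # hypothetical name of the image, video or 3D model to show
    scale: float = 1.0  # size of the overlay relative to the marker

class MarkerRegistry:
    """Maps known marker IDs to the content that should be layered over them."""

    def __init__(self) -> None:
        self._overlays: Dict[str, Overlay] = {}

    def register(self, marker_id: str, overlay: Overlay) -> None:
        self._overlays[marker_id] = overlay

    def overlays_for(self, detected_ids: List[str]) -> List[Overlay]:
        """Return overlays for the known markers in a frame, ignoring unknown ones."""
        return [self._overlays[m] for m in detected_ids if m in self._overlays]

registry = MarkerRegistry()
registry.register("poster-01", Overlay("trailer.mp4"))
registry.register("box-07", Overlay("assembly_guide.glb", scale=0.5))

# IDs as they might come from a (not shown) marker detector for one frame.
visible = registry.overlays_for(["poster-01", "unknown-marker"])
```

Only the registered marker yields an overlay here; the unrecognized one is skipped, which mirrors the idea that content is triggered by known markers only.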

Markerless AR is more complex. The AR device doesn't focus on a specific point, so the device must recognize items as they appear in view. This type of AR requires a recognition algorithm that detects nearby objects and determines what they are. Then, using the onboard sensors, the device can overlay images within the user's environment.
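To make the markerless case a little more concrete, the sketch below starts from the output of a recognition step and decides where to draw a text label for each recognized object. The recognition algorithm itself is not shown. The `Detection` structure, the 0.6 confidence threshold and the 8-pixel margin are assumptions made for this example rather than part of any specific AR framework.

```python
from dataclasses import dataclass
from typing import List, Tuple

@dataclass(frozen=True)
class Detection:
    label: str                      # what the recognizer thinks the object is
    confidence: float               # 0.0 to 1.0
    box: Tuple[int, int, int, int]  # (left, top, right, bottom) in pixels

def label_anchors(detections: List[Detection],
                  min_confidence: float = 0.6,
                  margin_px: int = 8) -> List[Tuple[str, int, int]]:
    """Return (label, x, y) positions for labels drawn just above each object."""
    anchors = []
    for d in detections:
        if d.confidence < min_confidence:
            continue  # skip uncertain recognitions rather than mislabel the scene
        left, top, right, _bottom = d.box
        x = (left + right) // 2        # horizontally centered on the object
        y = max(0, top - margin_px)    # above the box, clamped to the frame
        anchors.append((d.label, x, y))
    return anchors

frame_detections = [
    Detection("chair", 0.9, (100, 200, 300, 400)),
    Detection("lamp", 0.3, (0, 0, 10, 10)),
    Detection("door", 0.8, (0, 2, 50, 100)),
]
anchors = label_anchors(frame_detections)
```

In a real application the positions would be refreshed every frame, using the onboard sensors to keep each label attached to its object as the device moves.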

Differences between AR and VR

VR is a virtual environment created with software and presented to users in such a way that their brain suspends disbelief long enough to accept a virtual world as a real environment. Virtual reality is primarily experienced through a headset with sight and sound.

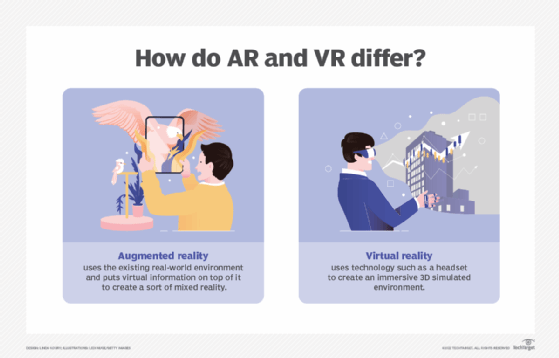

The biggest difference between AR and VR is that augmented reality uses the existing real-world environment and puts virtual information on top of it, whereas VR completely immerses users in a virtually rendered environment.

The devices used to accomplish this are also different. VR uses headsets that fit over the user's head and present them with simulated audiovisual information. AR devices are less restrictive and typically include phones, glasses, projections and HUDs.

In VR, people are placed inside a 3D environment in which they can move around and interact with the generated environment. AR, however, keeps users grounded in the real-world environment, overlaying virtual data as a visual layer within the environment. So, for example, while VR places a user in a simulated environment, AR could overlay a web browser in front of the user in their living room. For spatial computing headsets, like Apple Vision Pro or Meta Quest 3, where the device is blocking the user's natural vision, a technique called passthrough is used. Here, the headset's display shows what the device's front-facing cameras see.
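Conceptually, passthrough with an overlay amounts to drawing virtual pixels on top of a live camera frame. The sketch below shows that compositing step using standard alpha blending on small in-memory images. It is an illustration under simplifying assumptions only: frames are plain nested lists of RGB tuples, both images have the same size, and none of this reflects how Apple's or Meta's headsets actually implement passthrough.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]
RGBA = Tuple[int, int, int, int]

def composite(camera_frame: List[List[RGB]],
              overlay: List[List[RGBA]]) -> List[List[RGB]]:
    """Blend an RGBA overlay onto an RGB camera frame of the same size.

    An overlay alpha of 0 leaves the camera pixel visible; 255 replaces it.
    """
    out = []
    for cam_row, ov_row in zip(camera_frame, overlay):
        row = []
        for (cr, cg, cb), (r, g, b, a) in zip(cam_row, ov_row):
            alpha = a / 255
            row.append((
                round(r * alpha + cr * (1 - alpha)),
                round(g * alpha + cg * (1 - alpha)),
                round(b * alpha + cb * (1 - alpha)),
            ))
        out.append(row)
    return out

# A 1x2 gray camera frame with a red overlay that covers only the second pixel.
camera = [[(100, 100, 100), (100, 100, 100)]]
virtual = [[(255, 0, 0, 0), (255, 0, 0, 255)]]
result = composite(camera, virtual)
```

The first pixel keeps the camera image because the overlay is fully transparent there, while the second is replaced by the virtual content.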

While VR simulates an environment for the user, AR overlays data over the real world.

Although the term is sometimes used interchangeably with AR, mixed reality refers to a virtual display over a real-world environment with which users can interact. For example, Apple Vision Pro can project a virtual keyboard that the wearer can use to type. The key difference between mixed reality and AR is the user's ability to interact with the digital display.

Top AR use cases

AR can be used in the following ways, among others:

- Retail. Consumers can use a store's online app to see how products, such as furniture, will look in their own homes before buying.

- Entertainment and gaming. AR can be used to overlay a video game in the real world or enable users to animate their faces in different and creative ways on social media.

- Navigation. A user can overlay a route to their destination over a live view of a road. AR used for navigation can also display information about local businesses in the user's immediate surroundings.

- Tools and measurement. Mobile devices can use AR to measure different 3D points in the user's environment.

- Art and architecture. AR can help artists visualize or work on a project.

- Military. Data can be displayed on a vehicle's windshield, indicating destination directions, distances, weather and road conditions.

- Archaeology. AR aids archaeological research by helping archaeologists reconstruct sites. 3D models help museum visitors and future archaeologists experience an excavation site as if they were there.
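The tools and measurement use case in the list above comes down to simple geometry once an AR framework has turned the user's taps into 3D points. A minimal sketch, assuming the points are already expressed in meters in a shared coordinate system (the `path_length` helper is a name made up for this example):

```python
import math
from typing import Sequence, Tuple

Point3D = Tuple[float, float, float]

def path_length(points: Sequence[Point3D]) -> float:
    """Total straight-line distance along consecutive 3D points, in the input units."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))

# Two taps, 3 m apart along one axis and 4 m apart along another.
two_points = path_length([(0.0, 0.0, 0.0), (3.0, 4.0, 0.0)])

# Adding a third tap extends the measured path.
three_points = path_length([(0.0, 0.0, 0.0), (3.0, 4.0, 0.0), (3.0, 4.0, 12.0)])
```

The difficult part in practice is estimating the 3D points accurately from camera and sensor data; the distance calculation itself is the easy step.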

Examples of AR

Examples of AR include the following:

- Target app. The Target retail app feature See it in Your Space lets users take a photo of a space in their home and digitally view an object, such as a picture on the wall or a chair, to see how it will look there.

- Apple Measure app. The Measure app on Apple iOS acts like a tape measure by letting users select two or more points in their environment and measure the distance between them.

- Snapchat. Snapchat can overlay a filter or mask over the user's video or picture.

- Pokemon Go. Pokemon Go is a popular mobile AR game that uses the GPS sensor in the player's device to determine where Pokemon creatures appear in the player's surrounding environment for them to catch.

- Google Glass. Google Glass was Google's first commercial attempt at a glasses-based AR system. This small wearable computer, which was discontinued in 2023, let users work hands-free. Companies such as DHL and DB Schenker used Google Glass and third-party software to help frontline workers in global supply chain logistics and customized shipping.

- U.S. Army Tactical Augmented Reality (TAR). The U.S. Army uses AR in an eyepiece called TAR. TAR mounts onto the soldier's helmet and aids in locating another soldier's position.

- Apple Vision Pro. Apple Vision Pro is a spatial computing device that offers AR, VR and mixed-reality features. It live-maps a user's environment, and it offers passthrough and the ability to pin projections like web browsing windows to specific places in the user's environment. Users can control the device using gestures.

- Meta Quest 3. Meta Quest 3 is a mixed-reality headset that offers many of the same features as Apple Vision Pro, including passthrough and productivity features. Users control this headset through gestures or controllers.

A side profile of Apple Vision Pro and its battery pack

Future of AR technology

AR technology is growing steadily as apps and games like Pokemon Go and retail store AR apps become more popular and familiar to users.

Apple continues to develop and update its mobile augmented reality development tool set, ARKit. Companies, including Target and Ikea, use ARKit in their flagship AR shopping apps for iPhone and iPad. ARKit 6, for example, enables the rendering of AR in high dynamic range 4K and improves image and video capture. It also provides a Depth API, which uses per-pixel depth information to help a device's camera understand the size and shape of an object. In addition, it includes scene geometry, which creates a topological map of a space, along with other features.
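To see why per-pixel depth helps with size and shape, consider the standard pinhole camera model: a pixel plus its depth can be projected back into a 3D point, and the on-screen extent of an object scales with its distance from the camera. The sketch below illustrates that relationship in plain Python. It does not use ARKit's or ARCore's actual APIs, and the focal lengths and principal point are made-up values for the example.

```python
from typing import Tuple

def unproject(u: float, v: float, depth_m: float,
              fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float, float]:
    """Turn a pixel (u, v) with a depth in meters into a 3D point in camera space.

    fx, fy are focal lengths in pixels; (cx, cy) is the principal point.
    """
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)

def real_size_m(pixel_extent: float, depth_m: float, focal_length_px: float) -> float:
    """Approximate real-world size of something spanning pixel_extent pixels at depth_m."""
    if focal_length_px <= 0:
        raise ValueError("focal_length_px must be positive")
    return pixel_extent * depth_m / focal_length_px

# Assumed intrinsics for a 1920x1080 image: 1000 px focal length, centered principal point.
center_point = unproject(960, 540, 2.0, fx=1000, fy=1000, cx=960, cy=540)
side_point = unproject(1460, 540, 2.0, fx=1000, fy=1000, cx=960, cy=540)
chair_width = real_size_m(pixel_extent=400, depth_m=2.0, focal_length_px=1000)
```

Under these assumed intrinsics, an object that spans 400 pixels at a depth of 2 meters is roughly 0.8 meters wide, which is the kind of estimate a furniture preview or measuring app relies on.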

ARCore, Google's platform for building AR experiences on Android and iOS, continues to evolve and improve. For example, ARCore uses a geospatial API that sources data from Google Earth 3D models and Street View image data from Google Maps. Similar to ARKit's Depth API, ARCore has improved its Depth API, optimizing it for longer-range depth sensing.

Improved AR, VR and mixed-reality headsets are also being released. For example, Meta improved its Quest 2 headset with Meta Quest 3, which was released in October 2023. This new headset is slimmer, lighter and more ergonomic than Quest 2.

In February 2024, Apple released Apple Vision Pro, bringing more competition to the AR and VR headset market. Vision Pro is targeted at early adopters and developers at a much higher price point than Quest 3. Meta Platforms is pursuing a wider audience at a $499 price point, while Apple is pricing Vision Pro at about $3,499. It's expected that Apple will produce a non-Pro variant of its headset at a more affordable price in the future. Developers building apps for Apple Vision Pro will have to work with the visionOS software development kit. However, they can still use familiar Apple tools, such as ARKit, SwiftUI or RealityKit, to build apps.

Other potential future advancements for AR include the following:

- More powerful and lighter devices.

- The use of artificial intelligence for face and room scanning, object detection and labeling, as well as for text recognition.

- The expansion of 5G networks that could make it easier to support cloud-based AR experiences by providing AR applications with higher data speeds and lower latency.


This was last updated in March 2024