AI vs. machine learning vs. deep learning: Key differences

The terms artificial intelligence, machine learning and deep learning are often used interchangeably, but they aren't the same. Understand the differences and how they're used.

Artificial intelligence, machine learning and deep learning are popular terms in enterprise IT that are sometimes used interchangeably, particularly when companies are marketing their products. The terms, however, aren't synonymous; there are important distinctions.

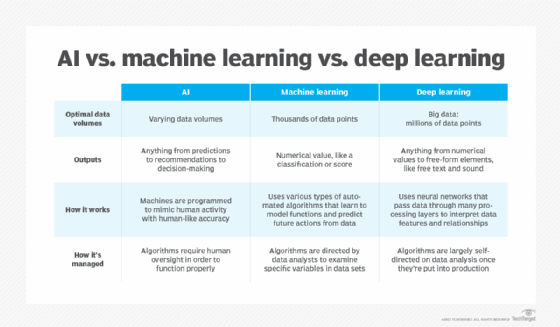

AI refers to the simulation of human intelligence by machines. It has an ever-changing definition. As new technologies are created to simulate humans, the capabilities and limitations of AI are revisited.

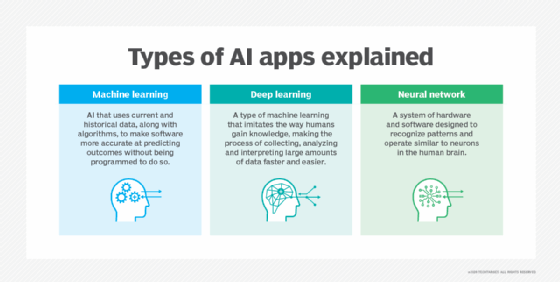

ML is a subset of artificial intelligence, deep learning is a subset of ML, and neural networks form the backbone of deep learning.

To better understand the relationship between the different technologies, here's a primer on artificial intelligence vs. machine learning vs. deep learning.

What is artificial intelligence?

The term AI has been around since the 1950s. It reflects the effort to build machines that can rival what made humans the dominant lifeform on the planet: their intelligence. However, defining intelligence is tricky because what's perceived as intelligent changes over time.

Early AI systems were rule-based computer programs that could solve somewhat complex problems. Instead of hardcoding every decision the software was supposed to make, the program was divided into a knowledge base and an inference engine. Developers filled out the knowledge base with facts, and the inference engine then queried those facts to get results.
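The split between a knowledge base and an inference engine can be sketched in a few lines of Python. This is a minimal, hypothetical example rather than a reconstruction of any historical system: the facts, the rules and the `forward_chain` function are illustrative assumptions. The inference engine uses forward chaining, repeatedly applying if-then rules to the known facts until nothing new can be derived.

```python
# Hypothetical rule-based expert system: a knowledge base of facts and
# if-then rules, plus a forward-chaining inference engine.

def forward_chain(facts, rules):
    """Return every fact derivable from `facts` by applying `rules`.

    Each rule is a (conditions, conclusion) pair: when all conditions are
    known facts, the conclusion is added as a new fact. The loop repeats
    until a full pass over the rules adds nothing new.
    """
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conclusion not in known and conditions <= known:
                known.add(conclusion)
                changed = True
    return known


# Knowledge base filled in by developers (illustrative facts and rules).
FACTS = {"has_feathers", "lays_eggs", "cannot_fly", "swims"}
RULES = [
    ({"has_feathers"}, "is_bird"),
    ({"is_bird", "cannot_fly", "swims"}, "is_penguin"),
    ({"is_bird", "can_fly"}, "can_migrate"),
]

if __name__ == "__main__":
    derived = forward_chain(FACTS, RULES)
    print(sorted(derived - FACTS))  # ['is_bird', 'is_penguin']
```

Every conclusion the engine can reach was written in advance by a developer as a rule, which is why systems like this can't learn anything their rules don't already cover.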

This type of AI was limited because it relied heavily on human intervention and input. Rule-based systems lack the flexibility to learn and evolve, and they're hardly considered intelligent anymore.

Modern types of AI and AI algorithms learn from historical data. This makes them useful for applications such as robotics, self-driving cars, power grid optimization and natural language understanding (NLU). While AI sometimes yields superhuman performance in these fields, it still has a way to go before it competes with human intelligence.

For now, AI can't learn the way humans do, from just a few examples. AI typically must be trained on huge amounts of data to understand a topic, and most algorithms can't transfer their understanding of one domain to another. For instance, people who learn a game such as StarCraft can quickly learn to play StarCraft II. But for AI, StarCraft II is a whole new world; it must learn each game from scratch.

Human intelligence can link meanings. For instance, consider the word human. People can identify humans in pictures and videos. AI has also gained that capability. But people also know what to expect from humans. They never expect a human to have four wheels and emit carbon like a car. An AI system, on the other hand, can't figure this out unless trained on a lot of data.

AI's definition is a moving target. For instance, optical character recognition used to be considered advanced AI, but it no longer is. However, a deep learning algorithm that is trained on thousands of handwriting samples and can convert handwriting to text is considered advanced by today's definition.

People were amazed when AI algorithms became sophisticated enough to outperform expert human radiologists on certain diagnostic tasks. But later we learned about their limitations. That's why we now distinguish between narrow, or weak, AI and the human-level version of AI that computer and data scientists are pursuing: artificial general intelligence (AGI). Every AI application that exists today falls under narrow AI, while AGI remains a theoretical goal.

Machine learning, deep learning and neural networks have key differences.

How businesses use AI

Businesses across various vertical markets use general-purpose AI. Different algorithms are suited to different tasks as follows:

- Communication. Generative AI transformer models are used to generate text and to automate responses to emails and phone calls. For example, natural language processing (NLP) techniques such as text classification and sentiment analysis let AI systems understand incoming email content or phone conversations. These systems then generate appropriate responses based on their training data.

- Customer service. AI-powered chatbots are more advanced than traditional, static chatbots with predetermined responses. AI chatbots analyze users' contexts and use NLP to generate responses to their queries and concerns.

- Coding. Transformer models also automate menial coding tasks to assist software and web developers. This form of generative AI simplifies tedious coding processes, such as debugging.

- Manufacturing. General-purpose AI is used to automate various manufacturing processes and to power robots and collaborative robots (cobots) in factories.

Transformer models are built on the transformer neural network architecture. GPT models are used to generate text, while BERT models are used for language understanding tasks such as text classification and summarization. OpenAI's Codex is used for code generation. These neural networks are trained on vast data sets of human language or code. They interpret the meaning of user inputs and generate appropriate outputs.
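At the core of a transformer is the attention mechanism, which lets each position in a sequence weigh every other position when computing its output. The following is a minimal pure-Python sketch of scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V. The function names and toy vectors are assumptions for illustration; real models such as GPT or BERT add learned projection matrices, multiple attention heads and many stacked layers, none of which is shown here.

```python
import math


def softmax(xs):
    """Numerically stable softmax: turns scores into weights that sum to 1."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V.

    Each argument is a list of vectors (lists of floats). `keys` and
    `values` must have the same number of rows.
    """
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity between this query and every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # The output is a weighted average of the value vectors.
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs


if __name__ == "__main__":
    # A query that closely matches the first key puts nearly all its weight there.
    print(attention([[10.0, 0.0]], [[10.0, 0.0], [0.0, 10.0]], [[1.0, 0.0], [0.0, 1.0]]))
```

Because the weights come from comparing queries with keys, the same code attends differently to each input, which is what lets transformers relate words that are far apart in a sentence.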

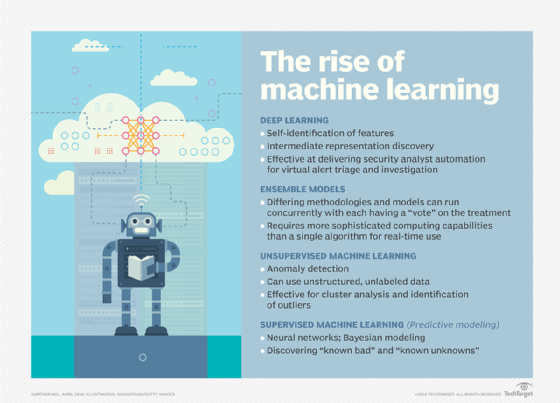

What is machine learning?

Machine learning is a subset of AI; it's one of the approaches developed to mimic human intelligence. ML is an advancement on symbolic AI, also known as "good old-fashioned" AI, which is based on rule-based systems that use if-then conditions.

The advent of ML marked a turning point in AI development. Before ML, we tried to teach computers all the variables of every decision they had to make. This made the process fully visible, and the algorithm could take care of many complex scenarios.

In its most complex form, the AI would traverse several decision branches and find the one with the best results. That is how IBM's Deep Blue was designed to beat Garry Kasparov at chess.
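Searching decision branches for the best outcome is classically done with minimax search, in which one player maximizes the score and assumes the opponent will minimize it. The sketch below runs plain minimax over a tiny hypothetical game tree. Deep Blue's real search was far more elaborate, with pruning, a handcrafted evaluation function and specialized hardware, so treat this only as an illustration of the idea.

```python
def minimax(node, maximizing):
    """Return the best score reachable from `node`, assuming optimal play.

    A node is either a number (the evaluation score of a final position)
    or a list of child nodes. The maximizing player picks the highest-scoring
    branch; the opponent is assumed to pick the lowest-scoring one.
    """
    if isinstance(node, (int, float)):
        return node
    scores = [minimax(child, not maximizing) for child in node]
    return max(scores) if maximizing else min(scores)


def best_move(tree):
    """Index of the move that maximizes the guaranteed score."""
    scores = [minimax(child, maximizing=False) for child in tree]
    return scores.index(max(scores))


# Hypothetical two-move-deep game tree: three candidate moves, each followed
# by three possible replies, scored from the maximizing player's viewpoint.
TREE = [[3, 12, 8], [2, 4, 6], [14, 5, 2]]

if __name__ == "__main__":
    print(best_move(TREE), minimax(TREE, maximizing=True))  # 0 3
```

The third move contains the highest leaf (14), but minimax rejects it because the opponent could answer with a reply worth only 2; the first move guarantees at least 3.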

But there are many things we can't define via rule-based algorithms, such as facial recognition. A rule-based system would need to detect different shapes, such as circles, then determine how they're positioned and what other shapes surround them before it could conclude that it had found an eye. Coding rules to detect a nose would be even more daunting.

Machine learning models take a different approach. They let machines learn on their own, ingesting vast amounts of labeled and unlabeled data to detect patterns. Many ML algorithms rely on statistical methods and big data to function; in fact, advancements in big data and the vast amounts of data collected are what made machine learning practical in the first place.

Commonly used machine learning algorithms include linear regression, logistic regression, decision trees, support vector machines, Naive Bayes, k-nearest neighbors and random forest, which are used for classification and regression, as well as k-means clustering and dimensionality reduction algorithms, which are used to find structure in unlabeled data.
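To illustrate learning from labeled data, here is a minimal sketch of k-nearest neighbors, one of the algorithms listed above. Instead of following hand-written rules, it classifies a new example by majority vote among the most similar labeled examples. The data set and the `knn_predict` function are hypothetical.

```python
import math
from collections import Counter


def knn_predict(train, query, k=3):
    """Label `query` by majority vote among its k nearest labeled examples.

    `train` is a list of (feature_vector, label) pairs; distance is Euclidean.
    """
    nearest = sorted(train, key=lambda pair: math.dist(pair[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical labeled examples: (height_cm, weight_kg) -> shirt size.
TRAIN = [
    ((150, 50), "small"), ((155, 55), "small"), ((160, 58), "small"),
    ((180, 85), "large"), ((185, 90), "large"), ((178, 80), "large"),
]

if __name__ == "__main__":
    print(knn_predict(TRAIN, (158, 54)))  # small
    print(knn_predict(TRAIN, (182, 84)))  # large
```

Adding more labeled examples changes the predictions without anyone rewriting a rule, which is the core difference from the rule-based approach.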

Linear regression excels at predicting numeric values, such as future sales figures, and logistic regression excels at classification tasks. Other algorithms can be used for both prediction and classification. For example, a decision tree can examine features within input data to determine which branch of the tree the data fits into. That branch represents the most likely future event or classification.
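A simple linear regression can be fit in closed form with ordinary least squares, which chooses the slope and intercept that minimize the squared prediction errors. The sketch below assumes a single input variable and uses made-up monthly sales figures; `fit_line` is a hypothetical helper name.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); the line passes through the means.
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical monthly sales (in thousands of units) for months 1 through 5.
months = [1, 2, 3, 4, 5]
sales = [12.0, 14.0, 16.0, 18.0, 20.0]
slope, intercept = fit_line(months, sales)

if __name__ == "__main__":
    # Extrapolate the fitted line to month 6.
    print(slope * 6 + intercept)  # 22.0
```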

As machine learning makes its way into mainstream use, it's important to understand new terminology and concepts.

How businesses use ML

Machine learning efficiently analyzes large data sets with potentially millions of data points. These models perform various large-scale tasks, such as predictive analysis, image and speech recognition, and other classification tasks more efficiently than people.

ML is used to analyze large data sets to identify patterns and make predictions. Predictive algorithms such as linear regression are suitable for these tasks. Examples of businesses using the predictive power of ML include the following:

- Retail product recommendation engines. Retail and e-commerce companies use an ML model called a product recommendation engine. This model analyzes a customer's purchasing and browsing history and then recommends the products or services most relevant to them.

- Weather forecasting. An organization or agency that predicts future weather patterns and conditions relies on ML to analyze large data sets of historical weather patterns.

- Inventory management. Warehouses conduct inventory management using ML. They analyze consumer preferences, stock shortages and surpluses to predict items that will need to be stocked and ones that are low priority.
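Production recommendation engines use a variety of techniques. One common approach is item-based collaborative filtering, sketched below under simplifying assumptions: purchase history is the only signal, two products count as similar when the same customers bought them (measured with cosine similarity), and the data and function names are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity of two products, each represented by its set of buyers."""
    return len(a & b) / math.sqrt(len(a) * len(b))


def recommend(purchases, customer, top_n=2):
    """Suggest products often bought by the same people who bought
    what `customer` already owns (item-based collaborative filtering).

    `purchases` maps a customer ID to the set of product IDs they bought.
    """
    # Invert the data: product -> set of customers who bought it.
    buyers = {}
    for cust, items in purchases.items():
        for item in items:
            buyers.setdefault(item, set()).add(cust)

    owned = purchases[customer]
    scores = {
        item: sum(cosine(buyers[item], buyers[o]) for o in owned)
        for item in buyers
        if item not in owned
    }
    # Highest score first; ties broken alphabetically for a stable result.
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [item for item, score in ranked[:top_n] if score > 0]


# Hypothetical purchase histories.
PURCHASES = {
    "alice": {"tent", "sleeping_bag", "lantern"},
    "bob": {"tent", "sleeping_bag", "stove"},
    "carol": {"tent", "stove"},
    "dave": {"novel", "bookmark"},
    "erin": {"tent"},
}

if __name__ == "__main__":
    print(recommend(PURCHASES, "erin"))  # ['sleeping_bag', 'stove']
```

Products that no tent buyer purchased, such as the novel, score zero and are never suggested.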

Classification is another common use of ML, particularly for unstructured data such as text and images. Logistic regression algorithms are well suited to binary classification tasks, and convolutional neural networks (CNNs) are widely used to classify images. Examples include the following:

- Spam detection. Organizations use ML-powered filters to classify emails as authentic or spam. These filters are trained on data sets composed of both authentic and spam emails, so they learn to examine the features of each email to make their determinations.

- Image recognition. Large numbers of images are examined and classified according to specific criteria, such as whether they're real or fake. CNNs are trained using example images, enabling them to learn and analyze features associated with different categories.
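A spam filter of the kind described above can be sketched with multinomial Naive Bayes, one of the algorithms mentioned earlier, although production filters often use other models as well. The toy training messages, the labels and the function names below are assumptions for illustration. The model counts how often each word appears in authentic and spam messages, then picks the label with the higher log-probability, using add-one (Laplace) smoothing so that a word never seen with a given label doesn't zero out that label's score.

```python
import math
from collections import Counter


def train_nb(examples):
    """Count words per label from (text, label) training pairs."""
    word_counts = {}        # label -> Counter of word frequencies
    doc_counts = Counter()  # label -> number of training messages
    vocab = set()
    for text, label in examples:
        words = text.lower().split()
        word_counts.setdefault(label, Counter()).update(words)
        doc_counts[label] += 1
        vocab.update(words)
    return word_counts, doc_counts, vocab


def classify_nb(model, text):
    """Return the label with the highest log-probability for `text`."""
    word_counts, doc_counts, vocab = model
    total_docs = sum(doc_counts.values())
    best_label, best_score = None, -math.inf
    for label, counts in word_counts.items():
        total_words = sum(counts.values())
        # Log prior: how common this label is in the training data.
        score = math.log(doc_counts[label] / total_docs)
        for word in text.lower().split():
            if word not in vocab:
                continue  # words never seen in training carry no evidence here
            # Log likelihood with add-one (Laplace) smoothing.
            score += math.log((counts[word] + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Hypothetical training messages.
TRAINING = [
    ("win a free prize now", "spam"),
    ("claim your free money", "spam"),
    ("meeting agenda for monday", "authentic"),
    ("lunch with the project team", "authentic"),
]

if __name__ == "__main__":
    model = train_nb(TRAINING)
    print(classify_nb(model, "free prize money"))        # spam
    print(classify_nb(model, "project meeting monday"))  # authentic
```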

ML algorithms train machines, such as robots or cobots, to perform production line tasks. For example, a reinforcement learning algorithm rewards correct actions and discourages incorrect ones.
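Reinforcement learning can be illustrated with tabular Q-learning, one standard algorithm of this type. In the hypothetical setup below, an agent in a five-cell corridor earns a reward only for reaching the rightmost cell; over many episodes, its table of estimated long-term action values comes to favor moving right. A real robot would have far larger state and action spaces, and the environment, parameter values and function name here are all assumptions.

```python
import random


def q_learning(n_states=5, episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning on a hypothetical one-dimensional corridor.

    The agent starts in state 0 and can move left (action 0) or right
    (action 1). Reaching the last state earns a reward of 1 and ends the
    episode; every other step earns 0.
    """
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(n_states)]
    goal = n_states - 1
    for _ in range(episodes):
        state = 0
        while state != goal:
            # Epsilon-greedy: usually exploit the best known action, sometimes explore.
            if rng.random() < epsilon:
                action = rng.randrange(2)
            else:
                action = max((0, 1), key=lambda a: q[state][a])
            next_state = max(0, state - 1) if action == 0 else state + 1
            reward = 1.0 if next_state == goal else 0.0
            # Nudge the estimate toward reward + discounted best future value.
            best_next = 0.0 if next_state == goal else max(q[next_state])
            q[state][action] += alpha * (reward + gamma * best_next - q[state][action])
            state = next_state
    return q


if __name__ == "__main__":
    table = q_learning()
    print(["right" if table[s][1] > table[s][0] else "left" for s in range(4)])
```

The reward acts as the "correct action" signal: it is granted only at the goal, and the discount factor `gamma` propagates its value back to the earlier moves that led there.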

What is deep learning?

Deep learning is a subset of machine learning that uses multilayered neural networks to replicate aspects of human intelligence. The idea of building AI on neural networks dates back to the perceptron of the late 1950s and was revived in the 1980s, but deep learning didn't gain traction until 2012. While machine learning was made possible by the vast amounts of data being produced at the time, deep learning owes its adoption to cheaper computing power as well as advancements in algorithms.

Deep learning produced better results than were previously possible with traditional ML. Consider what goes into facial recognition. To detect a face, AI needs specific labeled data on facial features to learn what to look for. Deep learning uses layers of information processing, each gradually learning more complex representations of the data. The early layers might learn about colors, the next ones about shapes, the following ones about combinations of those shapes, and the final layers about actual objects.
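The idea of layers building on one another can be shown with a tiny two-layer network. In the sketch below, the hidden layer computes two intermediate features (roughly OR and AND of the inputs), and the output layer combines them into XOR, a function no single-layer linear classifier can represent. The weights are hand-picked for illustration; real deep learning models use differentiable activations and learn millions or billions of weights through backpropagation.

```python
def step(x):
    """Threshold activation: 1 if the input is positive, else 0."""
    return 1 if x > 0 else 0


def layer(inputs, weights, biases):
    """One fully connected layer: weighted sums followed by the activation."""
    return [step(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


# Hand-picked weights (illustrative, not learned).
HIDDEN_W = [[1, 1], [1, 1]]  # unit 0 behaves like OR, unit 1 like AND
HIDDEN_B = [-0.5, -1.5]
OUTPUT_W = [[1, -1]]         # fires for "OR but not AND"
OUTPUT_B = [-0.5]


def xor_net(a, b):
    """Forward pass: inputs -> hidden features -> output."""
    hidden = layer([a, b], HIDDEN_W, HIDDEN_B)
    return layer(hidden, OUTPUT_W, OUTPUT_B)[0]


if __name__ == "__main__":
    for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
        print(a, b, xor_net(a, b))
```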

Deep learning demonstrated a breakthrough in object recognition, and its rise quickly advanced AI on several fronts, including NLU. It underpins the most sophisticated AI systems available today. Deep learning algorithms include CNNs, recurrent neural networks, long short-term memory networks, deep belief networks and generative adversarial networks.

How businesses use deep learning

Deep neural networks are highly advanced algorithms that can analyze enormous data sets with potentially billions of data points. Deep learning algorithms make better use of very large data sets than traditional ML algorithms do. Applications that use deep learning include facial recognition systems, self-driving cars and deepfake content.

Real-world business use cases of deep learning include the following:

- Cybersecurity and fraud prevention. Large enterprises protecting sensitive data use neural networks for anomaly detection and to prevent unauthorized access. For example, financial institutions train neural networks on large sets of historical financial data to identify normal transaction patterns. Once trained, the networks can flag potentially fraudulent activity whenever patterns deviate from normal transaction data.

- Predictive maintenance. In manufacturing, predictive maintenance depends on continuously monitoring equipment. Deep learning techniques are used to analyze equipment performance data to detect signs of potential failure before it occurs.

- Healthcare and disease prevention. Healthcare providers use deep learning to analyze large numbers of data points in patient data and detect patterns and correlations that indicate signs of disease. This helps providers identify and prevent diseases.
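The learn-what's-normal, flag-the-deviations pattern in the fraud example can be illustrated without a neural network. The sketch below deliberately swaps in a much simpler statistical technique, a z-score threshold on transaction amounts, as a stand-in for what a trained network learns. The transaction history, the three-standard-deviation threshold and the function names are assumptions.

```python
import statistics


def fit_baseline(amounts):
    """Summarize 'normal' behavior as the mean and standard deviation."""
    return statistics.mean(amounts), statistics.stdev(amounts)


def is_anomalous(amount, baseline, threshold=3.0):
    """Flag an amount more than `threshold` standard deviations from the mean."""
    mean, stdev = baseline
    return abs(amount - mean) / stdev > threshold


# Hypothetical history of one customer's normal transaction amounts.
HISTORY = [42.0, 38.5, 51.0, 45.2, 39.9, 47.3, 44.1, 40.6]

if __name__ == "__main__":
    baseline = fit_baseline(HISTORY)
    print(is_anomalous(46.0, baseline))   # False
    print(is_anomalous(950.0, baseline))  # True
```

A neural network replaces the single mean and standard deviation with a learned model of many features at once, such as merchant, location and time, but the decision it supports is the same: how far does this transaction sit from normal?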

AI, machine learning and deep learning have different attributes and uses.

AI, ML and deep learning: Differences and similarities

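Machine learning and deep learning both represent milestones in AI's evolution, and both can require advanced hardware, such as high-end GPUs, along with access to a lot of power. However, deep learning models are typically more autonomous than traditional ML models: they learn relevant features directly from raw data instead of relying on people to engineer them, though they usually need more data and computing power to train.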

Aside from these differences, AI, machine learning and deep learning have the following similarities:

- They contribute to the creation of intelligent machines.

- They can solve many of today's complex problems more easily than older programming methodologies can.

- They rely on algorithms to make predictions, discern important patterns in data and carry out tasks.

All three disciplines use data for training models. Models are fed data sets to analyze and learn important information like insights or patterns. In learning from experience, they eventually become high-performance models.

Data quality and diversity are important factors in each form of AI. Diverse data sets help mitigate biases embedded in training data that can lead to skewed outputs, and high-quality data minimizes errors so that models are reliable. Like humans, an AI model must learn iteratively to improve its predictive, problem-solving and decision-making capabilities over time.

Editor's note: David Petersson wrote this feature, and Cameron Hashemi-Pour revised and added to it to include information on AI, ML and deep learning uses and similarities.

Cameron Hashemi-Pour is a technology writer for WhatIs. Before joining TechTarget, he graduated from the University of Massachusetts Dartmouth and received his Master of Fine Arts degree in professional writing/communications. He then worked at Context Labs BV, a software company based in Cambridge, Mass., as a technical editor.

David Petersson is a developer and freelance writer who covers various technology topics, from cybersecurity and artificial intelligence to hacking and blockchain. David tries to identify the intersection of technology and human life as well as how it affects the future.

Next Steps

Top degree programs for studying artificial intelligence

AI transparency: What is it and why do we need it?

AI risks businesses must confront and how to address them

AI regulation: What businesses need to know

Top AI and machine learning trends
