importONNXLayers - (To be removed) Import layers from ONNX network - MATLAB

(To be removed) Import layers from ONNX network

Syntax

lgraph = importONNXLayers(modelfile)

lgraph = importONNXLayers(modelfile,Name=Value)

Description

lgraph = importONNXLayers(modelfile) imports the layers and weights of a pretrained ONNX™ (Open Neural Network Exchange) network from the file modelfile. The function returns lgraph as a LayerGraph object compatible with a DAGNetwork or dlnetwork object.

importONNXLayers requires the Deep Learning Toolbox™ Converter for ONNX Model Format support package. If this support package is not installed, then importONNXLayers provides a download link.

Note

By default, importONNXLayers tries to generate a custom layer when the software cannot convert an ONNX operator into an equivalent built-in MATLAB® layer. For a list of operators for which the software supports conversion, see ONNX Operators Supported for Conversion into Built-In MATLAB Layers.

importONNXLayers saves the generated custom layers in the namespace +modelfile.

importONNXLayers does not automatically generate a custom layer for each ONNX operator that is not supported for conversion into a built-in MATLAB layer. For more information on how to handle unsupported layers, see Tips.

lgraph = importONNXLayers(modelfile,Name=Value) imports the layers and weights from an ONNX network with additional options specified by one or more name-value arguments. For example, OutputLayerType="classification" imports a layer graph compatible with a DAGNetwork object, with a classification output layer appended to the end of the first output branch of the imported network architecture.

Examples

Download and install the Deep Learning Toolbox Converter for ONNX Model Format support package.

Type importONNXLayers at the command line.

If Deep Learning Toolbox Converter for ONNX Model Format is not installed, then the function provides a link to the required support package in the Add-On Explorer. To install the support package, click the link, and then click Install. Check that the installation is successful by importing the network from the model file "simplenet.onnx" at the command line. If the support package is installed, then the function returns a LayerGraph object.

modelfile = "simplenet.onnx"; lgraph = importONNXLayers(modelfile)

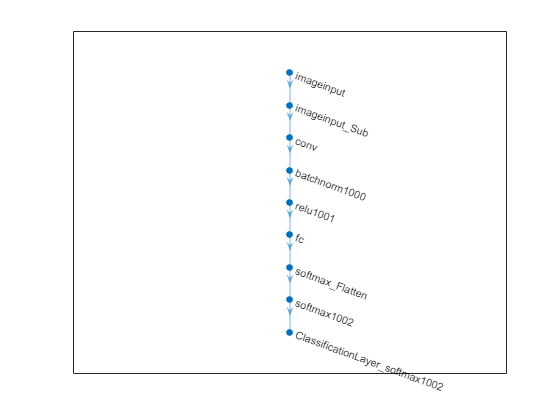

lgraph = LayerGraph with properties:

Layers: [9×1 nnet.cnn.layer.Layer]

Connections: [8×2 table]

InputNames: {'imageinput'}

OutputNames: {'ClassificationLayer_softmax1002'}

Plot the network architecture.

plot(lgraph)

Import a pretrained ONNX network as a LayerGraph object. Then, assemble the imported layers into a DAGNetwork object, and use the assembled network to classify an image.

Generate an ONNX model of the squeezenet convolutional neural network.

squeezeNet = squeezenet; exportONNXNetwork(squeezeNet,"squeezeNet.onnx");

Specify the model file and the class names.

modelfile = "squeezeNet.onnx"; ClassNames = squeezeNet.Layers(end).Classes;

Import the layers and weights of the ONNX network. By default, importONNXLayers imports the network as a LayerGraph object compatible with a DAGNetwork object.

lgraph = importONNXLayers(modelfile)

lgraph = LayerGraph with properties:

Layers: [70×1 nnet.cnn.layer.Layer]

Connections: [77×2 table]

InputNames: {'data'}

OutputNames: {'ClassificationLayer_prob'}

Analyze the imported network architecture.

analyzeNetwork(lgraph)

Display the last layer of the imported network. The output shows that the layer graph has a ClassificationOutputLayer at the end of the network architecture.

lgraph.Layers(end)

ans = ClassificationOutputLayer with properties:

Name: 'ClassificationLayer_prob'

Classes: 'auto'

ClassWeights: 'none'

OutputSize: 'auto'

Hyperparameters

LossFunction: 'crossentropyex'

The classification layer does not contain the classes, so you must specify these before assembling the network. If you do not specify the classes, then the software automatically sets the classes to 1, 2, ..., N, where N is the number of classes.

The classification layer has the name 'ClassificationLayer_prob'. Set the classes to ClassNames, and then replace the imported classification layer with the new one.

cLayer = lgraph.Layers(end); cLayer.Classes = ClassNames; lgraph = replaceLayer(lgraph,'ClassificationLayer_prob',cLayer);

Assemble the layer graph using assembleNetwork to return a DAGNetwork object.

net = assembleNetwork(lgraph)

net = DAGNetwork with properties:

Layers: [70×1 nnet.cnn.layer.Layer]

Connections: [77×2 table]

InputNames: {'data'}

OutputNames: {'ClassificationLayer_prob'}

Read the image you want to classify and display the size of the image. The image is 384-by-512 pixels and has three color channels (RGB).

I = imread("peppers.png"); size(I)

Resize the image to the input size of the network. Show the image.

I = imresize(I,[227 227]); imshow(I)

Classify the image using the imported network.

label = classify(net,I)

label = categorical bell pepper

Import a pretrained ONNX network as a LayerGraph object compatible with a dlnetwork object. Then, convert the layer graph to a dlnetwork to classify an image.

Generate an ONNX model of the squeezenet convolutional neural network.

squeezeNet = squeezenet; exportONNXNetwork(squeezeNet,"squeezeNet.onnx");

Specify the model file and the class names.

modelfile = "squeezeNet.onnx"; ClassNames = squeezeNet.Layers(end).Classes;

Import the layers and weights of the ONNX network. Specify to import the network as a LayerGraph object compatible with a dlnetwork object.

lgraph = importONNXLayers(modelfile,TargetNetwork="dlnetwork")

lgraph = LayerGraph with properties:

Layers: [70×1 nnet.cnn.layer.Layer]

Connections: [77×2 table]

InputNames: {'data'}

OutputNames: {1×0 cell}

Read the image you want to classify and display the size of the image. The image is 384-by-512 pixels and has three color channels (RGB).

I = imread("peppers.png"); size(I)

Resize the image to the input size of the network. Show the image.

I = imresize(I,[227 227]); imshow(I)

Convert the imported layer graph to a dlnetwork object.

dlnet = dlnetwork(lgraph);

Convert the image to a dlarray. Format the image with the dimensions "SSCB" (spatial, spatial, channel, batch). In this case, the batch size is 1, so you can omit it ("SSC").

I_dlarray = dlarray(single(I),"SSCB");

Classify the sample image and find the predicted label.

prob = predict(dlnet,I_dlarray); [~,label] = max(prob);

Display the classification result.

ClassNames(label)

ans = categorical bell pepper

Import an ONNX long short-term memory (LSTM) network as a layer graph, and then find and replace the placeholder layers. An LSTM network enables you to input sequence data into a network and make predictions based on the individual time steps of the sequence data.

lstmNet has a similar architecture to the LSTM network created in Sequence Classification Using Deep Learning. lstmNet is trained to recognize the speaker given time series data representing two Japanese vowels spoken in succession.

Specify lstmNet as the model file.

modelfile = "lstmNet.onnx";

Import the layers and weights of the ONNX network. By default, importONNXLayers imports the network as a LayerGraph object compatible with a DAGNetwork object.

lgraph = importONNXLayers(modelfile)

Warning: Unable to import some ONNX operators, because they are not supported. They have been replaced by placeholder layers. To find these layers, call the function findPlaceholderLayers on the returned object.

1 operators(s) : Unable to create an output layer for the ONNX network output 'softmax1001' because its data format is unknown or unsupported by MATLAB output layers. If you know its format, pass it using the 'OutputDataFormats' argument.

To import the ONNX network as a function, use importONNXFunction.

lgraph = LayerGraph with properties:

Layers: [6×1 nnet.cnn.layer.Layer]

Connections: [5×2 table]

InputNames: {'sequenceinput'}

OutputNames: {1×0 cell}

importONNXLayers displays a warning and inserts a placeholder layer for the output layer.

You can check for placeholder layers by viewing the Layers property of lgraph or by using the findPlaceholderLayers function.

lgraph.Layers

ans = 6×1 Layer array with layers:

1 'sequenceinput' Sequence Input Sequence input with 12 dimensions

2 'lstm1000' LSTM LSTM with 100 hidden units

3 'fc_MatMul' Fully Connected 9 fully connected layer

4 'fc_Add' Elementwise Affine Applies an elementwise scaling followed by an addition to the input.

5 'Flatten_To_SoftmaxLayer1005' lstmNet.Flatten_To_SoftmaxLayer1005 lstmNet.Flatten_To_SoftmaxLayer1005

6 'OutputLayer_softmax1001' PLACEHOLDER LAYER Placeholder for 'added_outputLayer' ONNX operator

placeholderLayers = findPlaceholderLayers(lgraph)

placeholderLayers = PlaceholderLayer with properties:

Name: 'OutputLayer_softmax1001'

ONNXNode: [1×1 struct]

Weights: []

Learnable Parameters

No properties.

State Parameters No properties.


Create an output layer to replace the placeholder layer. First, create a classification layer with the name OutputLayer_softmax1001. If you do not specify the classes, then the software automatically sets them to 1, 2, ..., N, where N is the number of classes. In this case, the class data is a categorical vector of labels "1","2",..."9", which correspond to nine speakers.

outputLayer = classificationLayer('Name','OutputLayer_softmax1001');

Replace the placeholder layer with outputLayer by using the replaceLayer function.

lgraph = replaceLayer(lgraph,'OutputLayer_softmax1001',outputLayer);
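If you prefer explicit labels over the automatic class assignment described above, classificationLayer also accepts a Classes option. A minimal sketch that builds the same output layer with the nine speaker labels "1" to "9" stated up front; the labels are taken from the description of the class data above.

```matlab
% Sketch: output layer with explicit class names instead of automatic 1..N.
outputLayer = classificationLayer('Name','OutputLayer_softmax1001', ...
    'Classes',string(1:9));        % classes "1", "2", ..., "9"

outputLayer.Classes                % categorical vector of the nine class names
```

You would then pass this outputLayer to replaceLayer exactly as in the previous step.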

Display the Layers property of the layer graph to confirm the replacement.

lgraph.Layers

ans = 6×1 Layer array with layers:

1 'sequenceinput' Sequence Input Sequence input with 12 dimensions

2 'lstm1000' LSTM LSTM with 100 hidden units

3 'fc_MatMul' Fully Connected 9 fully connected layer

4 'fc_Add' Elementwise Affine Applies an elementwise scaling followed by an addition to the input.

5 'Flatten_To_SoftmaxLayer1005' lstmNet.Flatten_To_SoftmaxLayer1005 lstmNet.Flatten_To_SoftmaxLayer1005

6 'OutputLayer_softmax1001' Classification Output crossentropyex

Alternatively, define the output layer when you import the layer graph by using the OutputLayerType or OutputDataFormats option. Check whether the imported layer graphs have placeholder layers by using findPlaceholderLayers.

lgraph1 = importONNXLayers("lstmNet.onnx",OutputLayerType="classification"); findPlaceholderLayers(lgraph1)

ans = 0×1 Layer array with properties:

lgraph2 = importONNXLayers("lstmNet.onnx",OutputDataFormats="BC"); findPlaceholderLayers(lgraph2)

ans = 0×1 Layer array with properties:

The imported layer graphs lgraph1 and lgraph2 do not have placeholder layers.

Input Arguments

modelfile - Name of ONNX model file

Name of the ONNX model file containing the network, specified as a character vector or string scalar. The file must be in the current folder or in a folder on the MATLAB path, or you must include a full or relative path to the file.

Example: "cifarResNet.onnx"

Name-Value Arguments

Specify optional pairs of arguments as Name1=Value1,...,NameN=ValueN, where Name is the argument name and Value is the corresponding value. Name-value arguments must appear after other arguments, but the order of the pairs does not matter.

Example: importONNXLayers(modelfile,TargetNetwork="dagnetwork",GenerateCustomLayers=true,Namespace="CustomLayers") imports the network layers from modelfile as a layer graph compatible with a DAGNetwork object and saves the automatically generated custom layers in the namespace +CustomLayers in the current folder.

GenerateCustomLayers - Option for custom layer generation

Option for custom layer generation, specified as a numeric or logical 1 (true) or 0 (false). If you set GenerateCustomLayers to true, importONNXLayers tries to generate a custom layer when the software cannot convert an ONNX operator into an equivalent built-in MATLAB layer. importONNXLayers saves each generated custom layer to a separate .m file in +Namespace. To view or edit a custom layer, open the associated .m file. For more information on custom layers, see Custom Layers.

Example: GenerateCustomLayers=false

Namespace - Name of custom layers namespace

Name of the custom layers namespace in which importONNXLayers saves custom layers, specified as a character vector or string scalar. importONNXLayers saves the custom layers namespace +Namespace in the current folder. If you do not specify Namespace, then importONNXLayers saves the custom layers in a namespace named +modelfile in the current folder. For more information about namespaces, see Create Namespaces.

Example: Namespace="shufflenet_9"

Example: Namespace="CustomLayers"
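As a rough sketch of GenerateCustomLayers and Namespace used together, the following imports a model and then lists any generated custom layer files. It assumes the file squeezeNet.onnx from the examples (created on the fly if missing, which needs the SqueezeNet support package). That model may convert entirely into built-in layers, in which case no custom layer files are generated.

```matlab
% Sketch: generate custom layers into a named namespace and list them.
modelfile = "squeezeNet.onnx";
if ~isfile(modelfile)
    exportONNXNetwork(squeezenet,modelfile);   % create the ONNX file if needed
end

lgraph = importONNXLayers(modelfile, ...
    GenerateCustomLayers=true, ...
    Namespace="CustomLayers");

% Any generated custom layers are .m files in +CustomLayers in the current folder.
if isfolder("+CustomLayers")
    files = dir(fullfile("+CustomLayers","*.m"));
    disp({files.name}')
else
    disp("No custom layers were generated.")
end
```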

TargetNetwork - Target type of Deep Learning Toolbox network

Target type of Deep Learning Toolbox network for imported network architecture, specified as "dagnetwork" or "dlnetwork". The function importONNXLayers imports the network architecture as a LayerGraph object compatible with a DAGNetwork or dlnetwork object.

- If you specify TargetNetwork as "dagnetwork", the imported lgraph must include input and output layers specified by the ONNX model or that you specify using the name-value arguments InputDataFormats, OutputDataFormats, or OutputLayerType.
- If you specify TargetNetwork as "dlnetwork", importONNXLayers appends a CustomOutputLayer at the end of each output branch of lgraph, and might append a CustomInputLayer at the beginning of an input branch. The function appends a CustomInputLayer if the input data formats or input image sizes are not known. For network-specific information on the data formats of these layers, see the properties of the CustomInputLayer and CustomOutputLayer objects. For information on how to interpret Deep Learning Toolbox input and output data formats, see Conversion of ONNX Input and Output Tensors into Built-In MATLAB Layers.

Example: TargetNetwork="dlnetwork" imports a LayerGraph object compatible with a dlnetwork object.

InputDataFormats - Data format of network inputs

Data format of the network inputs, specified as a character vector, string scalar, or string array. importONNXLayers tries to interpret the input data formats from the ONNX file. The name-value argument InputDataFormats is useful when importONNXLayers cannot derive the input data formats.

Set InputDataFormats to a data format in the ordering of an ONNX input tensor. For example, if you specify InputDataFormats as "BSSC", the imported network has one imageInputLayer input. For more information on how importONNXLayers interprets the data format of ONNX input tensors and how to specify InputDataFormats for different Deep Learning Toolbox input layers, see Conversion of ONNX Input and Output Tensors into Built-In MATLAB Layers.

If you specify an empty data format ([] or ""), importONNXLayers automatically interprets the input data format.

Example: InputDataFormats='BSSC'

Example: InputDataFormats="BSSC"

Example: InputDataFormats=["BCSS","","BC"]

Example: InputDataFormats={'BCSS',[],'BC'}

Data Types: char | string | cell

OutputDataFormats - Data format of network outputs

Data format of the network outputs, specified as a character vector, string scalar, or string array. importONNXLayers tries to interpret the output data formats from the ONNX file. The name-value argument OutputDataFormats is useful when importONNXLayers cannot derive the output data formats.

Set OutputDataFormats to a data format in the ordering of an ONNX output tensor. For example, if you specify OutputDataFormats as "BC", the imported network has one classificationLayer output. For more information on how importONNXLayers interprets the data format of ONNX output tensors and how to specify OutputDataFormats for different Deep Learning Toolbox output layers, see Conversion of ONNX Input and Output Tensors into Built-In MATLAB Layers.

If you specify an empty data format ([] or ""), importONNXLayers automatically interprets the output data format.

Example: OutputDataFormats='BC'

Example: OutputDataFormats="BC"

Example: OutputDataFormats=["BCSS","","BC"]

Example: OutputDataFormats={'BCSS',[],'BC'}

Data Types: char | string | cell

ImageInputSize - Size of input image for first network input

Size of the input image for the first network input, specified as a vector of three or four numerical values corresponding to [height,width,channels] for 2-D images and [height,width,depth,channels] for 3-D images. The network uses this information only when the ONNX model in modelfile does not specify the input size.

Example: ImageInputSize=[28 28 1] for a 2-D grayscale input image

Example: ImageInputSize=[224 224 3] for a 2-D color input image

Example: ImageInputSize=[28 28 36 3] for a 3-D color input image
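When importONNXLayers cannot derive the input or output formats by itself, you can pass them together with ImageInputSize. The sketch below uses squeezeNet.onnx from the examples only so that it runs. That file already specifies its input size and formats, so here the options simply restate them: an ONNX "BCSS" image input and a "BC" classification output. For your own model, substitute the formats in the ordering of its ONNX tensors.

```matlab
% Sketch: state the input/output data formats and image size explicitly.
modelfile = "squeezeNet.onnx";
if ~isfile(modelfile)
    exportONNXNetwork(squeezenet,modelfile);   % create the ONNX file if needed
end

lgraph = importONNXLayers(modelfile, ...
    InputDataFormats="BCSS", ...     % ONNX ordering: batch, channel, spatial, spatial
    OutputDataFormats="BC", ...      % ONNX ordering: batch, channel
    ImageInputSize=[227 227 3]);     % [height width channels]; used only if the model omits it

lgraph.Layers(1)                     % image input layer
lgraph.Layers(end)                   % classification output layer
```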

OutputLayerType - Layer type for first network output

Layer type for the first network output, specified as "classification", "regression", or "pixelclassification". The function importONNXLayers appends a ClassificationOutputLayer, RegressionOutputLayer, or pixelClassificationLayer (Computer Vision Toolbox) object to the end of the first output branch of the imported network architecture. Appending a pixelClassificationLayer (Computer Vision Toolbox) object requires Computer Vision Toolbox™. If the ONNX model in modelfile specifies the output layer type or you specify TargetNetwork as "dlnetwork", importONNXLayers ignores the name-value argument OutputLayerType.

Example: OutputLayerType="regression"

FoldConstants - Constant folding optimization

Constant folding optimization, specified as "deep", "shallow", or "none". Constant folding optimizes the imported network architecture by computing operations on ONNX initializers (initial constant values) during the conversion of ONNX operators to equivalent built-in MATLAB layers.

If the ONNX network contains operators that the software cannot convert to equivalent built-in MATLAB layers (see ONNX Operators Supported for Conversion into Built-In MATLAB Layers), then importONNXLayers inserts a placeholder layer in place of each unsupported layer. For more information, see Tips.

Constant folding optimization can reduce the number of placeholder layers. When you set FoldConstants to "deep", the imported layers include the same or fewer placeholder layers, compared to when you set the argument to "shallow". However, the importing time might increase. Set FoldConstants to "none" to disable the network architecture optimization.

Example: FoldConstants="shallow"
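To see the effect of constant folding on a particular model, you can import it once per FoldConstants setting and count the placeholder layers with findPlaceholderLayers. A minimal sketch, again assuming squeezeNet.onnx from the examples. For that model all three counts may well be 0, so the comparison is more informative for models that contain unsupported operators.

```matlab
% Sketch: compare the number of placeholder layers for each folding mode.
modelfile = "squeezeNet.onnx";
if ~isfile(modelfile)
    exportONNXNetwork(squeezenet,modelfile);   % create the ONNX file if needed
end

modes = ["none" "shallow" "deep"];
counts = zeros(size(modes));
for k = 1:numel(modes)
    lg = importONNXLayers(modelfile,FoldConstants=modes(k));
    counts(k) = numel(findPlaceholderLayers(lg));
    fprintf("%-8s %d placeholder layer(s)\n",modes(k),counts(k));
end
```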

Output Arguments

lgraph - Network architecture of pretrained ONNX model

Network architecture of the pretrained ONNX model, returned as a LayerGraph object.

To use the imported layer graph for prediction, you must convert the LayerGraph object to a DAGNetwork or dlnetwork object. Specify the name-value argument TargetNetwork as "dagnetwork" or "dlnetwork" depending on the intended workflow.

- Convert a LayerGraph to a DAGNetwork by using assembleNetwork. On the DAGNetwork object, you then predict class labels using the classify function.
- Convert a LayerGraph to a dlnetwork by using dlnetwork. On the dlnetwork object, you then predict class labels using the predict function. Specify the input data as a dlarray using the correct data format (for more information, see the fmt argument of dlarray).

Limitations

importONNXLayers supports these:

- ONNX intermediate representation version 9
- ONNX operator sets 6–20

Note

If you import an exported network, layers of the reimported network might differ from layers of the original network, and might not be supported.

More About

importONNXLayers supports these ONNX operators for conversion into built-in MATLAB layers, with some limitations.

* If importONNXLayers imports the Conv ONNX operator as a convolution2dLayer object and the padding of the Conv operator is a vector with only two elements [p1 p2], importONNXLayers sets the Padding option of convolution2dLayer to [p1 p2 p1 p2].

| ONNX Operator | ONNX Importer Custom Layer |
|---|---|
| Clip | nnet.onnx.layer.ClipLayer |
| Div | nnet.onnx.layer.ElementwiseAffineLayer |
| Flatten | nnet.onnx.layer.FlattenLayer or nnet.onnx.layer.Flatten3dLayer |
| ImageScaler | nnet.onnx.layer.ElementwiseAffineLayer |
| Reshape | nnet.onnx.layer.FlattenLayer |
| Sub | nnet.onnx.layer.ElementwiseAffineLayer |

importONNXLayers tries to interpret the data format of the ONNX network's input and output tensors, and then convert them into built-in MATLAB input and output layers. For details on the interpretation, see the tables Conversion of ONNX Input Tensors into Deep Learning Toolbox Layers and Conversion of ONNX Output Tensors into MATLAB Layers.

In Deep Learning Toolbox, each data format character must be one of these labels:

- S - Spatial
- C - Channel
- B - Batch observations
- T - Time or sequence
- U - Unspecified
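These labels combine into the format string of a dlarray. For example, a batch of four 227-by-227 RGB images uses "SSCB", and the functions dims and finddim report the labels and locate a dimension by its label. The array contents in this sketch are random placeholders.

```matlab
% Four 227-by-227 RGB images (random data), labeled spatial, spatial, channel, batch.
X = dlarray(rand(227,227,3,4,"single"),"SSCB");

fmt  = dims(X);                  % 'SSCB'
cDim = finddim(X,"C");           % 3, the channel dimension
nObs = size(X,finddim(X,"B"));   % 4 observations
```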

Conversion of ONNX Input Tensors into Deep Learning Toolbox Layers

| ONNX Input Tensor | MATLAB Input Format | Shape | Type | Deep Learning Toolbox Layer |
|---|---|---|---|---|
| BC | CB | c-by-n array, where c is the number of features and n is the number of observations | Features | featureInputLayer |
| BCSS, BSSC, CSS, SSC | SSCB | h-by-w-by-c-by-n numeric array, where h, w, c, and n are the height, width, and number of channels of the images, and the number of observations, respectively | 2-D image | imageInputLayer |
| BCSSS, BSSSC, CSSS, SSSC | SSSCB | h-by-w-by-d-by-c-by-n numeric array, where h, w, d, c, and n are the height, width, depth, and number of channels of the images, and the number of image observations, respectively | 3-D image | image3dInputLayer |
| TBC | CBT | c-by-s-by-n matrix, where c is the number of features of the sequence, s is the sequence length, and n is the number of sequence observations | Vector sequence | sequenceInputLayer |
| TBCSS | SSCBT | h-by-w-by-c-by-s-by-n array, where h, w, and c correspond to the height, width, and number of channels of the image, respectively, s is the sequence length, and n is the number of image sequence observations | 2-D image sequence | sequenceInputLayer |
| TBCSSS | SSSCBT | h-by-w-by-d-by-c-by-s-by-n array, where h, w, d, and c correspond to the height, width, depth, and number of channels of the image, respectively, s is the sequence length, and n is the number of image sequence observations | 3-D image sequence | sequenceInputLayer |
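The table maps ONNX tensor layouts to MATLAB formats. If you hold data in an ONNX layout yourself, for example an image batch stored as "BCSS" (observations-by-channels-by-height-by-width), one permutation puts it in the MATLAB "SSCB" layout. A small sketch with arbitrary sizes:

```matlab
% Hypothetical ONNX-ordered batch: N-by-C-by-H-by-W ("BCSS").
N = 2; C = 3; H = 5; W = 4;
xONNX = rand(N,C,H,W,"single");

% Reorder dimensions to H-by-W-by-C-by-N, the MATLAB "SSCB" layout.
xMATLAB = permute(xONNX,[3 4 2 1]);
size(xMATLAB)                                % 5 4 3 2

% The same element, indexed (n,c,h,w) in ONNX order and (h,w,c,n) in MATLAB order.
isequal(xMATLAB(5,4,3,2),xONNX(2,3,5,4))     % logical 1

X = dlarray(xMATLAB,"SSCB");
```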

Conversion of ONNX Output Tensors into MATLAB Layers

| ONNX Output Tensor | MATLAB Output Format | MATLAB Layer |
|---|---|---|
| BC, TBC | CB, CBT | classificationLayer |
| BCSS, BSSC, CSS, SSC, BCSSS, BSSSC, CSSS, SSSC | SSCB, SSSCB | pixelClassificationLayer (Computer Vision Toolbox) |
| TBCSS, TBCSSS | SSCBT, SSSCBT | regressionLayer |

You can use MATLAB Coder™ or GPU Coder™ together with Deep Learning Toolbox to generate MEX, standalone CPU, CUDA® MEX, or standalone CUDA code for an imported network. For more information, see Generate Code and Deploy Deep Neural Networks.

- Use MATLAB Coder with Deep Learning Toolbox to generate MEX or standalone CPU code that runs on desktop or embedded targets. You can deploy generated standalone code that uses the Intel® MKL-DNN library or the ARM® Compute library. Alternatively, you can generate generic C or C++ code that does not call third-party library functions. For more information, see Deep Learning with MATLAB Coder (MATLAB Coder).

- Use GPU Coder with Deep Learning Toolbox to generate CUDA MEX or standalone CUDA code that runs on desktop or embedded targets. You can deploy generated standalone CUDA code that uses the CUDA deep neural network library (cuDNN), the TensorRT™ high performance inference library, or the ARM Compute library for Mali GPU. For more information, see Deep Learning with GPU Coder (GPU Coder).

importONNXLayers returns the network architecture lgraph as a LayerGraph object. For code generation, you must first convert the imported LayerGraph object to a network. Convert a LayerGraph object to a DAGNetwork or dlnetwork object by using assembleNetwork or dlnetwork. For more information on MATLAB Coder and GPU Coder support for Deep Learning Toolbox objects, see Supported Classes (MATLAB Coder) and Supported Classes (GPU Coder), respectively.

You can generate code for any imported network whose layers support code generation. For lists of the layers that support code generation with MATLAB Coder and GPU Coder, see Supported Layers (MATLAB Coder) and Supported Layers (GPU Coder), respectively. For more information on the code generation capabilities and limitations of each built-in MATLAB layer, see the Extended Capabilities section of the layer. For example, see Code Generation and GPU Code Generation of imageInputLayer.

importONNXLayers does not execute on a GPU. However, importONNXLayers imports the layers of a pretrained neural network for deep learning as a LayerGraph object, which you can use on a GPU.

- Convert the imported LayerGraph object to a DAGNetwork object by using assembleNetwork. On the DAGNetwork object, you can then predict class labels on either a CPU or GPU by using classify. Specify the hardware requirements using the name-value argument ExecutionEnvironment. For networks with multiple outputs, use the predict function and specify the name-value argument ReturnCategorical as true.
- Convert the imported LayerGraph object to a dlnetwork object by using dlnetwork. On the dlnetwork object, you can then predict class labels on either a CPU or GPU by using predict. The function predict executes on the GPU if either the input data or network parameters are stored on the GPU.
  - If you use minibatchqueue to process and manage the mini-batches of input data, the minibatchqueue object converts the output to a GPU array by default if a GPU is available.
  - Use dlupdate to convert the learnable parameters of a dlnetwork object to GPU arrays.

    net = dlupdate(@gpuArray,net)
- You can train the imported LayerGraph object on either a CPU or GPU by using the trainnet and trainNetwork functions. To specify training options, including options for the execution environment, use the trainingOptions function. Specify the hardware requirements using the name-value argument ExecutionEnvironment. For more information on how to accelerate training, see Scale Up Deep Learning in Parallel, on GPUs, and in the Cloud.

Using a GPU requires a Parallel Computing Toolbox™ license and a supported GPU device. For information about supported devices, see GPU Computing Requirements (Parallel Computing Toolbox).
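The dlnetwork route described above can be sketched as follows. To keep the block self-contained, a tiny stand-in network built from layers takes the place of a network converted from an imported layer graph (in practice, dlnet = dlnetwork(lgraph)). The sizes are arbitrary, and the block falls back to the CPU when canUseGPU returns false.

```matlab
% Stand-in for an imported network: 4 features in, 3 class probabilities out.
layers = [
    featureInputLayer(4)
    fullyConnectedLayer(3)
    softmaxLayer];
dlnet = dlnetwork(layers);

X = rand(4,2,"single");                  % 4 features, 2 observations
if canUseGPU
    dlnet = dlupdate(@gpuArray,dlnet);   % move learnable parameters to the GPU
    X = gpuArray(X);                     % move input data to the GPU
end
dlX = dlarray(X,"CB");

prob = predict(dlnet,dlX);               % runs on the GPU if data or parameters are gpuArrays
prob = gather(extractdata(prob));        % 3-by-2 class probabilities in host memory
```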

Tips

- If the imported network contains an ONNX operator not supported for conversion into a built-in MATLAB layer (see ONNX Operators Supported for Conversion into Built-In MATLAB Layers) and importONNXLayers does not generate a custom layer, then importONNXLayers inserts a placeholder layer in place of the unsupported layer. To find the names and indices of the unsupported layers in the network, use the findPlaceholderLayers function. You can then replace a placeholder layer with a new layer that you define. To replace a layer, use replaceLayer.
- To use a pretrained network for prediction or transfer learning on new images, you must preprocess your images in the same way as the images that you use to train the imported model. The most common preprocessing steps are resizing images, subtracting image average values, and converting the images from RGB format to BGR format.
  - To resize images, use imresize. For example, imresize(image,[227 227]).
  - To convert images from RGB to BGR format, use flip. For example, flip(image,3).

  For more information about preprocessing images for training and prediction, see Preprocess Images for Deep Learning.
- MATLAB uses one-based indexing, whereas Python® uses zero-based indexing. In other words, the first element in an array has an index of 1 in MATLAB and 0 in Python. For more information about MATLAB indexing, see Array Indexing. In MATLAB, to use an array of indices (ind) created in Python, convert the array to ind+1.
- For more tips, see Tips on Importing Models from TensorFlow, PyTorch, and ONNX.
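The preprocessing steps in the tips above can be combined into a short sketch. The 227-by-227 size and the per-channel mean values are hypothetical placeholders; use the size, averages, and channel order that your imported model was trained with.

```matlab
% Sketch: resize, subtract per-channel averages, and reorder channels RGB -> BGR.
I = imread("peppers.png");          % RGB uint8 image shipped with MATLAB
I = imresize(I,[227 227]);          % resize to the network input size
I = single(I);

channelMean = reshape(single([123.68 116.78 103.94]),1,1,3);  % hypothetical RGB means
I = I - channelMean;                % implicit expansion subtracts each channel's mean
I = flip(I,3);                      % reverse the channel order: RGB -> BGR
```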


Version History

Introduced in R2018a

Starting in R2023b, the importONNXLayers function issues a warning when you call it. Use importNetworkFromONNX instead. The importNetworkFromONNX function has these advantages over importONNXLayers:

- Imports an ONNX model into a dlnetwork object in a single step

- Provides a simplified workflow for importing models with unknown input and output information

- Has improved name-value arguments that you can use to more easily specify import options
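For a model that converts cleanly, the migration can look like this sketch, which again assumes squeezeNet.onnx from the examples. importNetworkFromONNX returns a dlnetwork object directly; checking its Initialized property is a reasonable precaution, because a network whose input sizes are unknown might need initializing before prediction.

```matlab
modelfile = "squeezeNet.onnx";
if ~isfile(modelfile)
    exportONNXNetwork(squeezenet,modelfile);   % create the ONNX file if needed
end

% Previous workflow (to be removed): import a layer graph, then convert it.
lgraph = importONNXLayers(modelfile,TargetNetwork="dlnetwork");
dlnetOld = dlnetwork(lgraph);

% Recommended workflow: import directly into a dlnetwork object.
net = importNetworkFromONNX(modelfile);
if ~net.Initialized
    disp("Network is not initialized; initialize it with example input before predicting.")
end
```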

ImportWeights has been removed. Starting in R2021b, the ONNX model weights are automatically imported. In most cases, you do not need to make any changes to your code.

- If ImportWeights is not set in your code, importONNXLayers now imports the weights.
- If ImportWeights is set to true in your code, the behavior of importONNXLayers remains the same.
- If ImportWeights is set to false in your code, importONNXLayers now ignores the name-value argument ImportWeights and imports the weights.

If you import an ONNX model as a LayerGraph object compatible with a DAGNetwork object, the imported layer graph must include input and output layers. importONNXLayers tries to convert the input and output ONNX tensors into built-in MATLAB layers. For some networks that importONNXLayers previously imported with built-in MATLAB input and output layers, importONNXLayers might now insert placeholder layers instead. In this case, do one of the following to update your code:

- Specify the name-value argument TargetNetwork as "dlnetwork" to import the network as a LayerGraph object compatible with a dlnetwork object.
- Use the name-value arguments InputDataFormats, OutputDataFormats, and OutputLayerType to specify the imported network's inputs and outputs.
- Use importONNXFunction to import the network as a model function and an ONNXParameters object.

The layer names of an imported layer graph might differ from previous releases. To update your code, replace the existing name of a layer with the new name or lgraph.Layers(n).Name.
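One way to avoid hard-coding a layer name is to read it from the layer graph, for example from the OutputNames property. This sketch repeats the class-assignment step from the DAGNetwork example without the literal 'ClassificationLayer_prob', assuming squeezeNet.onnx and the squeezenet class names as in that example.

```matlab
modelfile = "squeezeNet.onnx";
squeezeNet = squeezenet;
if ~isfile(modelfile)
    exportONNXNetwork(squeezeNet,modelfile);   % create the ONNX file if needed
end
ClassNames = squeezeNet.Layers(end).Classes;

lgraph = importONNXLayers(modelfile);

% Look up the output layer by its current name instead of a hard-coded string.
outName = lgraph.OutputNames{1};
idx = find(strcmp({lgraph.Layers.Name},outName));

cLayer = lgraph.Layers(idx);
cLayer.Classes = ClassNames;
lgraph = replaceLayer(lgraph,outName,cLayer);
```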