Dopamine in motivational control: rewarding, aversive, and alerting

Author manuscript; available in PMC: 2011 Dec 9.

SUMMARY

Midbrain dopamine neurons are well known for their strong responses to rewards and their critical role in positive motivation. It has become increasingly clear, however, that dopamine neurons also transmit signals related to salient but non-rewarding experiences such as aversive and alerting events. Here we review recent advances in understanding the reward and non-reward functions of dopamine. Based on this data, we propose that dopamine neurons come in multiple types that are connected with distinct brain networks and have distinct roles in motivational control. Some dopamine neurons encode motivational value, supporting brain networks for seeking, evaluation, and value learning. Others encode motivational salience, supporting brain networks for orienting, cognition, and general motivation. Both types of dopamine neurons are augmented by an alerting signal involved in rapid detection of potentially important sensory cues. We hypothesize that these dopaminergic pathways for value, salience, and alerting cooperate to support adaptive behavior.

Introduction

The neurotransmitter dopamine (DA) has a crucial role in motivational control – in learning what things in the world are good and bad, and in choosing actions to gain the good things and avoid the bad things. The major sources of DA in the cerebral cortex and in most subcortical areas are the DA-releasing neurons of the ventral midbrain, located in the substantia nigra pars compacta (SNc) and ventral tegmental area (VTA) (Bjorklund and Dunnett, 2007). These neurons transmit DA in two modes, ‘tonic’ and ‘phasic’ (Grace, 1991; Grace et al., 2007). In their tonic mode DA neurons maintain a steady, baseline level of DA in downstream neural structures that is vital for enabling the normal functions of neural circuits (Schultz, 2007). In their phasic mode DA neurons sharply increase or decrease their firing rates for 100–500 milliseconds, causing large changes in DA concentrations in downstream structures lasting for several seconds (Schultz, 1998; Schultz, 2007).

These phasic DA responses are triggered by many types of rewards and reward-related sensory cues (Schultz, 1998) and are ideally positioned to fulfill DA’s roles in motivational control, including its roles as a teaching signal that underlies reinforcement learning (Schultz et al., 1997; Wise, 2005) and as an incentive signal that promotes immediate reward seeking (Berridge and Robinson, 1998). As a result, these phasic DA reward signals have taken on a prominent role in theories about the functions of cortical and subcortical circuits and have become the subject of intense neuroscience research. In the first part of this review we will introduce the conventional theory of phasic DA reward signals and will review recent advances in understanding their nature and their control over neural processing and behavior.

In contrast to the accepted role of DA in reward processing, there has been considerable debate over the role of phasic DA activity in processing non-rewarding events. Some theories suggest that DA neuron phasic responses primarily encode reward-related events (Schultz, 1998; Ungless, 2004; Schultz, 2007), while others suggest that DA neurons transmit additional non-reward signals related to surprising, novel, salient, and even aversive experiences (Redgrave et al., 1999; Horvitz, 2000; Di Chiara, 2002; Joseph et al., 2003; Pezze and Feldon, 2004; Lisman and Grace, 2005; Redgrave and Gurney, 2006). In the second part of this review we will discuss a series of studies that have put these theories to the test and have revealed much about the nature of non-reward signals in DA neurons. In particular, these studies provide evidence that DA neurons are more diverse than previously thought. Rather than encoding a single homogeneous motivational signal, DA neurons come in multiple types that encode reward and non-reward events in different manners. This poses a problem for general theories which seek to identify dopamine with a single neural signal or motivational mechanism.

To resolve this dilemma, in the final part of this review we propose a new hypothesis to explain the presence of multiple types of DA neurons, the nature of their neural signals, and their integration into distinct brain networks for motivational control. Our basic proposal is as follows. One type of DA neuron encodes motivational value, excited by rewarding events and inhibited by aversive events. These neurons support brain systems for seeking goals, evaluating outcomes, and value learning. A second type of DA neuron encodes motivational salience, excited by both rewarding and aversive events. These neurons support brain systems for orienting, cognitive processing, and motivational drive. In addition to their value- and salience-coding activity, both types of DA neurons also transmit an alerting signal, triggered by unexpected sensory cues of high potential importance. Together, we hypothesize that these value, salience, and alerting signals cooperate to coordinate downstream brain structures and control motivated behavior.

Dopamine in Reward: Conventional Theory

Dopamine in motivation of reward-seeking actions

Dopamine has long been known to be important for reinforcement and motivation of actions. Drugs that interfere with DA transmission interfere with reinforcement learning, while manipulations which enhance DA transmission, such as brain stimulation and addictive drugs, often act as reinforcers (Wise, 2004). DA transmission is crucial for creating a state of motivation to seek rewards (Berridge and Robinson, 1998; Salamone et al., 2007) and for establishing memories of cue-reward associations (Dalley et al., 2005). DA release is not necessary for all forms of reward learning and may not always be ‘liked’ in the sense of causing pleasure, but it is critical for causing goals to become ‘wanted’ in the sense of motivating actions to achieve them (Berridge and Robinson, 1998; Palmiter, 2008).

One hypothesis about how dopamine supports reinforcement learning is that it adjusts the strength of synaptic connections between neurons. The most straightforward version of this hypothesis is that dopamine controls synaptic plasticity according to a modified Hebbian rule that can be roughly stated as “neurons that fire together wire together, as long as they get a burst of dopamine”. In other words, if cell A activates cell B, and cell B causes a behavioral action which results in a reward, then dopamine would be released and the A→B connection would be reinforced (Montague et al., 1996; Schultz, 1998). This mechanism would allow an organism to learn the optimal choice of actions to gain rewards, given sufficient trial-and-error experience. Consistent with this hypothesis, dopamine has a potent influence on synaptic plasticity in numerous brain regions (Surmeier et al., 2010; Goto et al., 2010; Molina-Luna et al., 2009; Marowsky et al., 2005; Lisman and Grace, 2005). In some cases dopamine enables synaptic plasticity along the lines of the Hebbian rule described above, in a manner that is correlated with reward-seeking behavior (Reynolds et al., 2001). In addition to its effects on long-term synaptic plasticity, dopamine can also exert immediate control over neural circuits by modulating neural spiking activity and synaptic connections between neurons (Surmeier et al., 2007; Robbins and Arnsten, 2009), in some cases doing so in a manner that would promote immediate reward-seeking actions (Frank, 2005).
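The modified Hebbian rule described above can be illustrated with a minimal sketch. The function name, the learning rate, and the treatment of cell activity as a simple on/off value are illustrative assumptions and are not taken from the cited models.

```python
def three_factor_update(weight, pre_active, post_active, dopamine, learning_rate=0.1):
    """Return the updated A->B weight under a dopamine-gated Hebbian rule.

    The weight changes only when cell A (presynaptic) and cell B
    (postsynaptic) were both active AND a dopamine signal is present.
    All parameter names and values here are hypothetical.
    """
    coactivity = float(pre_active and post_active)
    return weight + learning_rate * coactivity * dopamine


w = 0.5
# A and B fire together and a dopamine burst follows: connection is reinforced.
w_rewarded = three_factor_update(w, True, True, dopamine=1.0)
# A and B fire together but no dopamine arrives: no change.
w_no_burst = three_factor_update(w, True, True, dopamine=0.0)
# Dopamine arrives but B did not fire: no change.
w_no_pairing = three_factor_update(w, True, False, dopamine=1.0)
```

In this sketch only the first case changes the weight, which captures the idea that co-activity alone is not sufficient without the dopamine signal.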

Dopamine neuron reward signals

In order to motivate actions that lead to rewards, dopamine should be released during rewarding experiences. Indeed, most DA neurons are strongly activated by unexpected primary rewards such as food and water, often producing phasic ‘bursts’ of activity (Schultz, 1998) (phasic excitations including multiple spikes (Grace and Bunney, 1983)). However, the pioneering studies of Wolfram Schultz showed that these DA neuron responses are not triggered by reward consumption per se. Instead they resemble a ‘reward prediction error’, reporting the difference between the reward that is received and the reward that was predicted to occur (Schultz et al., 1997) (Figure 1A). Thus, if a reward is larger than predicted, DA neurons are strongly excited (positive prediction error, Figure 1E, red); if a reward is smaller than predicted or fails to occur at its appointed time, DA neurons are phasically inhibited (negative prediction error, Figure 1E, blue); and if a reward is cued in advance so that its size is fully predictable, DA neurons have little or no response (zero prediction error, Figure 1C, black). The same principle holds for DA responses to sensory cues that provide new information about future rewards. DA neurons are excited when a cue indicates an increase in future reward value (Figure 1C, red), inhibited when a cue indicates a decrease in future reward value (Figure 1C, blue), and generally have little response to cues that convey no new reward information (Figure 1E, black). These DA responses resemble a specific type of reward prediction error called the temporal difference error or “TD error”, which has been proposed to act as a reinforcement signal for learning the value of actions and environmental states (Houk et al., 1995; Montague et al., 1996; Schultz et al., 1997). 
Computational models using a TD-like reinforcement signal can explain many aspects of reinforcement learning in humans, animals, and DA neurons themselves (Sutton and Barto, 1981; Waelti et al., 2001; Montague and Berns, 2002; Dayan and Niv, 2008).
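As a rough illustration of the TD error, the sketch below simulates repeated trials in which a cue is followed by a reward. It assumes a deliberately simplified task with a single cue state, a baseline state whose value is fixed at zero (so that cue onset is unpredictable), and arbitrary values for the learning rate `alpha` and discount factor `gamma`. It is not the specific model used in any of the cited studies.

```python
def td_error(reward, value_next, value_current, gamma=1.0):
    """Temporal difference error: reward + gamma * V(next) - V(current)."""
    return reward + gamma * value_next - value_current


def run_trials(n_trials, reward=1.0, alpha=0.2, gamma=1.0):
    """Learn the value of a cue that is always followed by a reward."""
    v_cue = 0.0
    history = []
    for _ in range(n_trials):
        # Cue onset: transition from baseline (value fixed at 0) to the cue state.
        delta_cue = td_error(0.0, v_cue, 0.0, gamma)
        # Reward delivery: transition from the cue state to a terminal state (value 0).
        delta_reward = td_error(reward, 0.0, v_cue, gamma)
        v_cue += alpha * delta_reward
        history.append((delta_cue, delta_reward))
    return v_cue, history


v_cue, history = run_trials(100)
first_cue, first_reward = history[0]
last_cue, last_reward = history[-1]
# After learning, omitting the reward yields a negative error.
omission_error = td_error(0.0, 0.0, v_cue)
```

On the first trial the error occurs at the time of the reward. After learning, the error at reward delivery is close to zero, the error at cue onset is close to the full reward value, and omitting the reward produces a negative error, in line with the qualitative response pattern described above.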

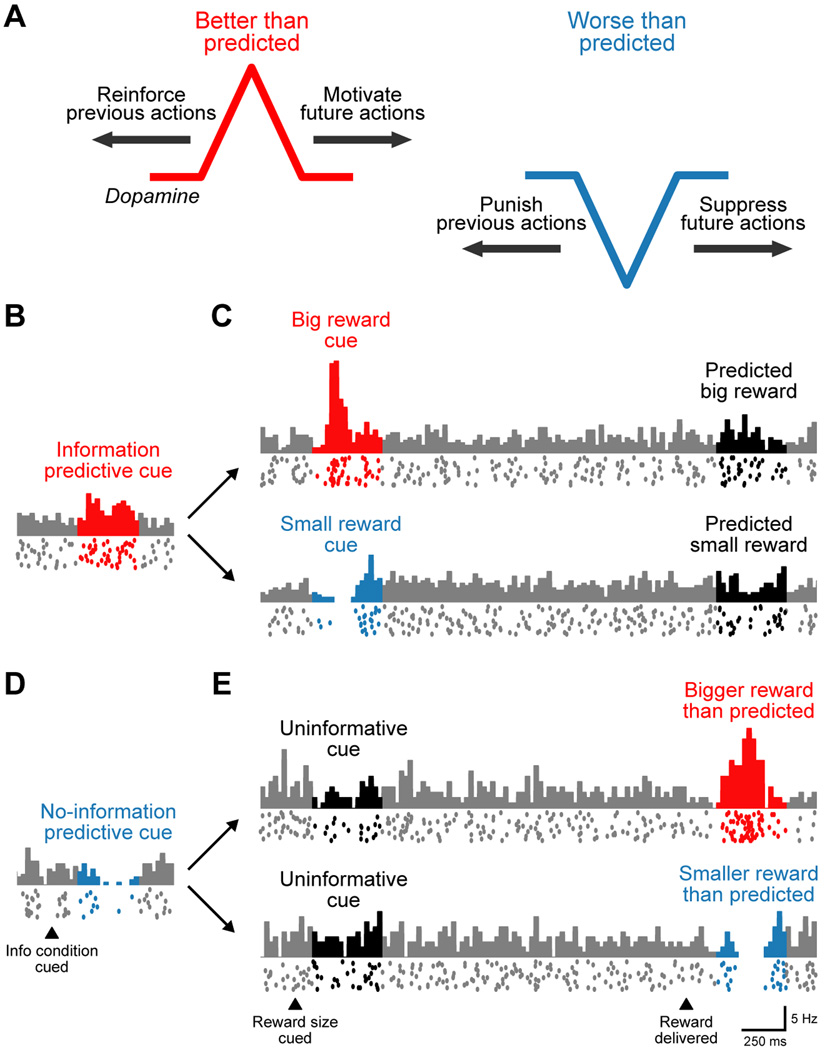

Figure 1. Dopamine coding of reward prediction errors and preference for predictive information.

(A) Conventional theories of DA reward signals. DA neurons encode a reward prediction error signal, responding with phasic excitation when a situation’s reward value becomes better than predicted (red) and phasic inhibition when the value becomes worse than expected (blue). These signals could be used for learning, to reinforce or punish previous actions (backward arrows) or for immediate control of behavior, to promote or suppress reward-seeking actions (forward arrows).

(B–E) An example DA neuron with conventional coding of reward prediction errors as well as coding of the subjective preference for predictive information. Each plot shows the neuron’s mean firing rate (histogram, top) and its spikes on 20 individual trials (bottom rasters) during each condition of the task. Data is from (Bromberg-Martin and Hikosaka, 2009).

(B) This DA neuron was excited by a cue indicating that an informative cue would appear to tell the size of a future reward (red).

(C) DA excitation by a big reward cue (red), inhibition by a small reward cue (blue), and no response to predictable reward outcomes (black).

(D) This DA neuron was inhibited by a cue indicating that an uninformative cue would appear which would leave the reward size unpredictable (blue).

(E) DA lack of response to uninformative cues (black), excitation by an unexpectedly big reward (red), and inhibition by an unexpectedly small reward (blue).

An impressive array of experiments have shown that DA signals represent reward predictions in a manner that closely matches behavioral preferences, including the preference for large rewards over small ones (Tobler et al., 2005), probable rewards over improbable ones (Fiorillo et al., 2003; Satoh et al., 2003; Morris et al., 2004), and immediate rewards over delayed ones (Roesch et al., 2007; Fiorillo et al., 2008; Kobayashi and Schultz, 2008). There is even evidence that DA neurons in humans encode the reward value of money (Zaghloul et al., 2009). Furthermore, DA signals emerge during learning with a similar timecourse to behavioral measures of reward prediction (Hollerman and Schultz, 1998; Satoh et al., 2003; Takikawa et al., 2004; Day et al., 2007) and are correlated with subjective measures of reward preference (Morris et al., 2006). These findings have established DA neurons as one of the best understood and most replicated examples of reward coding in the brain. As a result, recent studies have subjected DA neurons to intense scrutiny to discover how they generate reward predictions and how their signals act on downstream structures to control behavior.

Dopamine in Reward: Recent Advances

Dopamine neuron reward signals

Recent advances in understanding DA reward signals come from considering three broad questions: How do DA neurons learn reward predictions? How accurate are their predictions? And just what do they treat as rewarding?

How do DA neurons learn reward predictions? Classic theories suggest that reward predictions are learned through a gradual reinforcement process requiring repeated stimulus-reward pairings (Rescorla and Wagner, 1972; Montague et al., 1996). Each time stimulus A is followed by an unexpected reward, the estimated value of A is increased. Recent data, however, shows that DA neurons go beyond simple stimulus-reward learning and make predictions based on sophisticated beliefs about the structure of the world. DA neurons can predict rewards correctly even in unconventional environments where rewards paired with a stimulus cause a decrease in the value of that stimulus (Satoh et al., 2003; Nakahara et al., 2004; Bromberg-Martin et al., 2010c) or cause a change in the value of an entirely different stimulus (Bromberg-Martin et al., 2010b). DA neurons can also adapt their reward signals based on higher-order statistics of the reward distribution, such as scaling prediction error signals based on their expected variance (Tobler et al., 2005) and ‘spontaneously recovering’ their responses to extinguished reward cues (Pan et al., 2008). All of these phenomena form a remarkable parallel to similar effects seen in sensory and motor adaptation (Braun et al., 2010; Fairhall et al., 2001; Shadmehr et al., 2010), suggesting that they may reflect a general neural mechanism for predictive learning.
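One simple way to express the idea of scaling prediction errors by the variability of the predicted rewards is to divide the raw error by the standard deviation of the predicted distribution. The sketch below does this for two hypothetical cues. The reward volumes are made up for illustration, and normalization by standard deviation is an assumption rather than a claim about the computation DA neurons actually perform.

```python
from statistics import mean, pstdev


def scaled_prediction_error(outcome, possible_outcomes):
    """Raw prediction error divided by the spread of the predicted outcomes.

    possible_outcomes lists equally likely reward sizes signaled by a cue.
    """
    expected = mean(possible_outcomes)
    spread = pstdev(possible_outcomes)
    if spread == 0:
        return 0.0  # only one possible outcome, so it is fully predicted
    return (outcome - expected) / spread


narrow_cue = [0.0, 0.1]  # hypothetical reward volumes, equally likely
wide_cue = [0.0, 0.5]

# Raw errors for the larger outcome differ by a factor of five...
raw_narrow = 0.1 - mean(narrow_cue)
raw_wide = 0.5 - mean(wide_cue)
# ...but the scaled errors are the same for both cues.
scaled_narrow = scaled_prediction_error(0.1, narrow_cue)
scaled_wide = scaled_prediction_error(0.5, wide_cue)
```

Under this assumption, receiving the larger of two possible rewards produces the same scaled error regardless of the absolute reward sizes.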

How accurate are DA reward predictions? Recent studies have shown that DA neurons faithfully adjust their reward signals to account for three sources of prediction uncertainty. First, humans and animals suffer from internal timing noise that prevents them from making reliable predictions about long cue-reward time intervals (Gallistel and Gibbon, 2000). Thus, if cue-reward delays are short (1–2 seconds) timing predictions are accurate and reward delivery triggers little DA response, but for longer cue-reward delays timing predictions become less reliable and rewards evoke clear DA bursts (Kobayashi and Schultz, 2008; Fiorillo et al., 2008). Second, many cues in everyday life are imprecise, specifying a broad distribution of reward delivery times. DA neurons again reflect this form of timing uncertainty: they are progressively inhibited during variable reward delays, as though signaling increasingly negative reward prediction errors at each moment the reward fails to appear (Fiorillo et al., 2008; Bromberg-Martin et al., 2010a; Nomoto et al., 2010). Finally, many cues are perceptually complex, requiring detailed inspection to reach a firm conclusion about their reward value. In such situations DA reward signals occur at long latencies and in a gradual fashion, appearing to reflect the gradual flow of perceptual information as the stimulus value is decoded (Nomoto et al., 2010).
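The progressive inhibition during variable delays can be illustrated with a simplified calculation. The sketch assumes that time is divided into discrete steps, that the reward is equally likely to arrive at any of four steps, and that the momentary prediction error is the received reward minus the reward expected at that step (the conditional probability of delivery, or hazard rate, times the reward size). These are illustrative assumptions rather than the model used in the cited studies.

```python
from fractions import Fraction


def hazard_rates(delay_probs):
    """Probability that reward arrives at each step, given it has not arrived yet."""
    hazards = []
    remaining = Fraction(1)
    for p in delay_probs:
        hazards.append(p / remaining if remaining > 0 else Fraction(0))
        remaining -= p
    return hazards


reward_size = 1
# Hypothetical cue: reward equally likely at step 1, 2, 3, or 4.
delay_probs = [Fraction(1, 4)] * 4
hazards = hazard_rates(delay_probs)

# Error at each step where the reward could have arrived but did not.
# The last step is excluded because the reward always arrives by then.
omission_errors = [-(h * reward_size) for h in hazards[:-1]]
```

Because the conditional probability of reward rises as time passes without delivery, the error at each step where the reward fails to appear becomes increasingly negative.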

Just what events do DA neurons treat as rewarding? Conventional theories of reward learning suggest that DA neurons assign value based on the expected amount of future primary reward (Montague et al., 1996). Yet even when the rate of primary reward is held constant, humans and animals often express an additional preference for predictability – seeking environments where each reward’s size, probability, and timing can be known in advance (Daly, 1992; Chew and Ho, 1994; Ahlbrecht and Weber, 1996). A recent study in monkeys found that DA neurons signal this preference (Bromberg-Martin and Hikosaka, 2009). Monkeys expressed a strong preference to view informative visual cues that would allow them to predict the size of a future reward, rather than uninformative cues that provided no new information. In parallel, DA neurons were excited by the opportunity to view the informative cues in a manner that was correlated with the animal’s behavioral preference (Figure 1B,D). This suggests that DA neurons not only motivate actions to gain rewards but also motivate actions to make accurate predictions about those rewards, in order to ensure that rewards can be properly anticipated and prepared for in advance.

Taken together, these findings show that DA reward prediction error signals are sensitive to sophisticated factors that inform human and animal reward predictions, including adaptation to high-order reward statistics, reward uncertainty, and preferences for predictive information.

Effects of phasic dopamine reward signals on downstream structures

DA reward responses occur in synchronous phasic bursts (Joshua et al., 2009b), a response pattern that shapes DA release in target structures (Gonon, 1988; Zhang et al., 2009; Tsai et al., 2009). It has long been theorized that these phasic bursts influence learning and motivation in a distinct manner from tonic DA activity (Grace, 1991; Grace et al., 2007; Schultz, 2007; Lapish et al., 2007). Recently developed technology has made it possible to confirm this hypothesis by controlling DA neuron activity with fine spatial and temporal precision. Optogenetic stimulation of VTA DA neurons induces a strong conditioned place preference which only occurs when stimulation is applied in a bursting pattern (Tsai et al., 2009). Conversely, genetic knockout of NMDA receptors from DA neurons, which impairs bursting while leaving tonic activity largely intact, causes a selective impairment in specific forms of reward learning (Zweifel et al., 2009; Parker et al., 2010) (although note that this knockout also impairs DA neuron synaptic plasticity (Zweifel et al., 2008)). DA bursts may enhance reward learning by reconfiguring local neural circuits. Notably, reward-predictive DA bursts are sent to specific regions of the nucleus accumbens, and these regions have especially high levels of reward-predictive neural activity (Cheer et al., 2007; Owesson-White et al., 2009).

Compared to phasic bursts, less is known about the importance of phasic pauses in spiking activity for negative reward prediction errors. These pauses cause smaller changes in spike rate, are less modulated by reward expectation (Bayer and Glimcher, 2005; Joshua et al., 2009a; Nomoto et al., 2010), and may have smaller effects on learning (Rutledge et al., 2009). However, certain types of negative prediction error learning require the VTA (Takahashi et al., 2009), suggesting that phasic pauses may still be decoded by downstream structures.

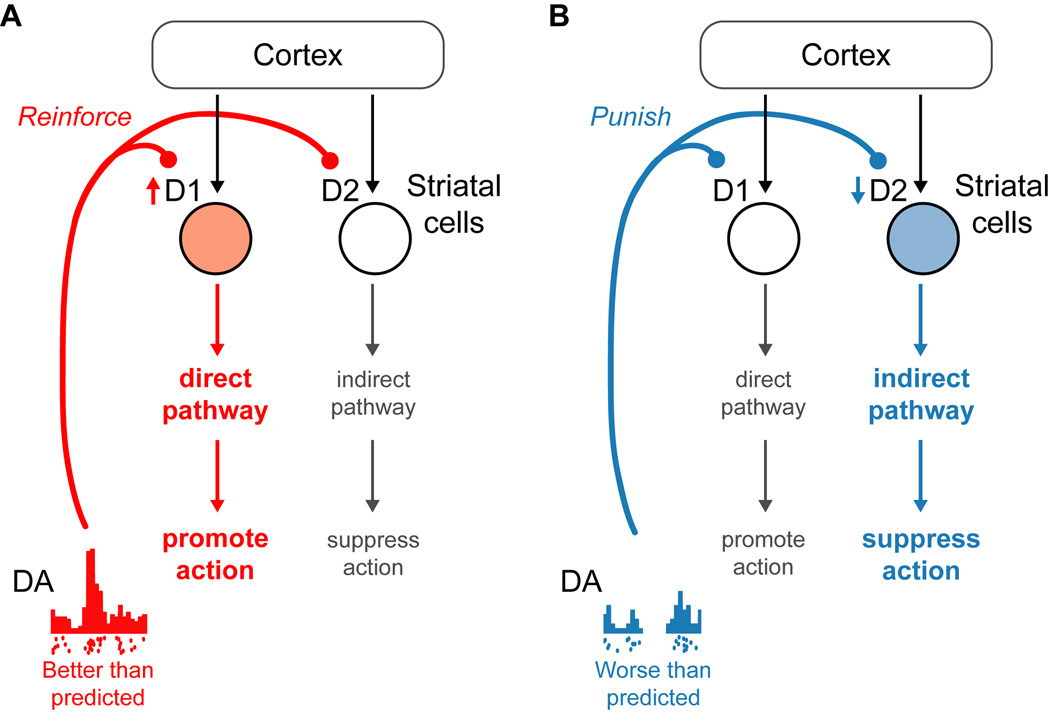

Since bursts and pauses cause very different patterns of DA release, they are likely to influence downstream structures through distinct mechanisms. There is recent evidence for this hypothesis in one major target of DA neurons, the dorsal striatum. Dorsal striatum projection neurons come in two types which express different DA receptors. One type expresses D1 receptors and projects to the basal ganglia ‘direct pathway’ to facilitate body movements; the second type expresses D2 receptors and projects to the ‘indirect pathway’ to suppress body movements (Figure 2) (Albin et al., 1989; Gerfen et al., 1990; Kravitz et al., 2010; Hikida et al., 2010). Based on the properties of these pathways and receptors, it has been theorized that DA bursts produce conditions of high DA, activate D1 receptors, and cause the direct pathway to select high-value movements (Figure 2A), whereas DA pauses produce conditions of low DA, inhibit D2 receptors, and cause the indirect pathway to suppress low-value movements (Figure 2B) (Frank, 2005; Hikosaka, 2007). Consistent with this hypothesis, high DA receptor activation promotes potentiation of cortico-striatal synapses onto the direct pathway (Shen et al., 2008) and learning from positive outcomes (Frank et al., 2004; Voon et al., 2010), while striatal D1 receptor blockade selectively impairs movements to rewarded targets (Nakamura and Hikosaka, 2006). In an analogous manner, low DA receptor activation promotes potentiation of cortico-striatal synapses onto the indirect pathway (Shen et al., 2008) and learning from negative outcomes (Frank et al., 2004; Voon et al., 2010), while striatal D2 receptor blockade selectively suppresses movements to non-rewarded targets (Nakamura and Hikosaka, 2006). 
This division of D1 and D2 receptor functions in motivational control explains many of the effects of DA-related genes on human behavior (Ullsperger, 2010; Frank and Fossella, 2010) and may extend beyond the dorsal striatum, as there is evidence for a similar division of labor in the ventral striatum (Grace et al., 2007; Lobo et al., 2010).
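The proposed division of labor between the direct and indirect pathways can be sketched as two separately learned weights per action. This is a loose simplification of the theories cited above. The function names, the learning rate, and the use of a simple difference between weights as the tendency to act are all illustrative assumptions.

```python
def update_pathways(go, nogo, prediction_error, learning_rate=0.1):
    """Update direct ('go') and indirect ('nogo') pathway weights for one action."""
    if prediction_error > 0:
        # DA burst -> high DA -> D1 receptors -> strengthen the direct pathway.
        go += learning_rate * prediction_error
    elif prediction_error < 0:
        # DA pause -> low DA -> D2 receptors -> strengthen the indirect pathway.
        nogo += learning_rate * -prediction_error
    return go, nogo


def action_propensity(go, nogo):
    """Net tendency to perform the action: facilitation minus suppression."""
    return go - nogo


go_a, nogo_a = 0.0, 0.0  # action A: outcomes better than predicted
go_b, nogo_b = 0.0, 0.0  # action B: outcomes worse than predicted
for _ in range(5):
    go_a, nogo_a = update_pathways(go_a, nogo_a, prediction_error=+1.0)
    go_b, nogo_b = update_pathways(go_b, nogo_b, prediction_error=-1.0)

propensity_a = action_propensity(go_a, nogo_a)
propensity_b = action_propensity(go_b, nogo_b)
```

In this sketch, learning from positive outcomes and learning from negative outcomes are carried by different weights, so each could in principle be altered independently.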

Figure 2. Dopamine control of positive and negative motivation in the dorsal striatum.

(A) If an action is followed by a new situation that is better than predicted, DA neurons fire a burst of spikes. This is thought to activate D1 receptors on direct pathway neurons, promoting immediate action as well as reinforcing cortico-striatal synapses to promote selection of that action in the future.

(B) If an action is followed by a new situation that is worse than predicted, DA neurons pause their spiking activity. This is thought to inhibit D2 receptors on indirect pathway neurons, promoting suppression of immediate action as well as reinforcing cortico-striatal synapses to promote suppression of that action in the future.

While the above scheme paints a simple picture of phasic DA control of behavior through its effects on the striatum, the full picture is much more complex. DA influences reward-related behavior by acting on many brain regions including the prefrontal cortex (Hitchcott et al., 2007), rhinal cortex (Liu et al., 2004), hippocampus (Packard and White, 1991; Grecksch and Matties, 1981) and amygdala (Phillips et al., 2010). The effects of DA are likely to differ widely between these regions due to variations in the density of DA innervation, DA transporters, metabolic enzymes, autoreceptors, receptors, and receptor coupling to intracellular signaling pathways (Neve et al., 2004; Bentivoglio and Morelli, 2005; Frank and Fossella, 2010). Furthermore, at least in the VTA, DA neurons can have different cellular properties depending on their projection targets (Lammel et al., 2008; Margolis et al., 2008), and some have the remarkable ability to transmit glutamate as well as dopamine (Descarries et al., 2008; Chuhma et al., 2009; Hnasko et al., 2010; Tecuapetla et al., 2010; Stuber et al., 2010; Birgner et al., 2010). Thus, the full extent of DA neuron control over neural processing is only beginning to be revealed.

Dopamine: Beyond Reward

Thus far we have discussed the role of DA neurons in reward-related behavior, founded upon dopamine responses resembling reward prediction errors. It has become increasingly clear, however, that DA neurons phasically respond to several types of events that are not intrinsically rewarding and are not cues to future rewards, and that these non-reward signals have an important role in motivational processing. These non-reward events can be grouped into two broad categories, aversive and alerting, which we will discuss in detail below. Aversive events include intrinsically undesirable stimuli (such as air puffs, bitter tastes, electrical shocks, and other unpleasant sensations) and sensory cues that have gained aversive properties through association with these events. Alerting events are unexpected sensory cues of high potential importance, which generally trigger immediate reactions to determine their meaning.

Diverse dopamine responses to aversive events

A neuron’s response to aversive events provides a crucial test of its functions in motivational control (Schultz, 1998; Berridge and Robinson, 1998; Redgrave et al., 1999; Horvitz, 2000; Joseph et al., 2003). In many respects we treat rewarding and aversive events in opposite manners, reflecting their opposite motivational value. We seek rewards and assign them positive value, while we avoid aversive events and assign them negative value. In other respects we treat rewarding and aversive events in similar manners, reflecting their similar motivational salience [FOOTNOTE1]. Both rewarding and aversive events trigger orienting of attention, cognitive processing, and increases in general motivation.

Which of these functions do DA neurons support? It has long been known that stressful and aversive experiences cause large changes in DA concentrations in downstream brain structures, and that behavioral reactions to these experiences are dramatically altered by DA agonists, antagonists, and lesions (Salamone, 1994; Di Chiara, 2002; Pezze and Feldon, 2004; Young et al., 2005). These studies have produced a striking diversity of results, however (Levita et al., 2002; Di Chiara, 2002; Young et al., 2005). Many studies are consistent with DA neurons encoding motivational salience. They report that aversive events increase DA levels and that behavioral aversion is supported by high levels of DA transmission (Salamone, 1994; Joseph et al., 2003; Ventura et al., 2007; Barr et al., 2009; Fadok et al., 2009) including phasic DA bursts (Zweifel et al., 2009). But other studies are more consistent with DA neurons encoding motivational value. They report that aversive events reduce DA levels and that behavioral aversion is supported by low levels of DA transmission (Mark et al., 1991; Shippenberg et al., 1991; Liu et al., 2008; Roitman et al., 2008). In many cases these mixed results have been found in single studies, indicating that aversive experiences cause different patterns of DA release in different brain structures (Thierry et al., 1976; Besson and Louilot, 1995; Ventura et al., 2001; Jeanblanc et al., 2002; Bassareo et al., 2002; Pascucci et al., 2007), and that DA-related drugs can produce a mixture of neural and behavioral effects similar to those caused by both rewarding and aversive experiences (Ettenberg, 2004; Wheeler et al., 2008).

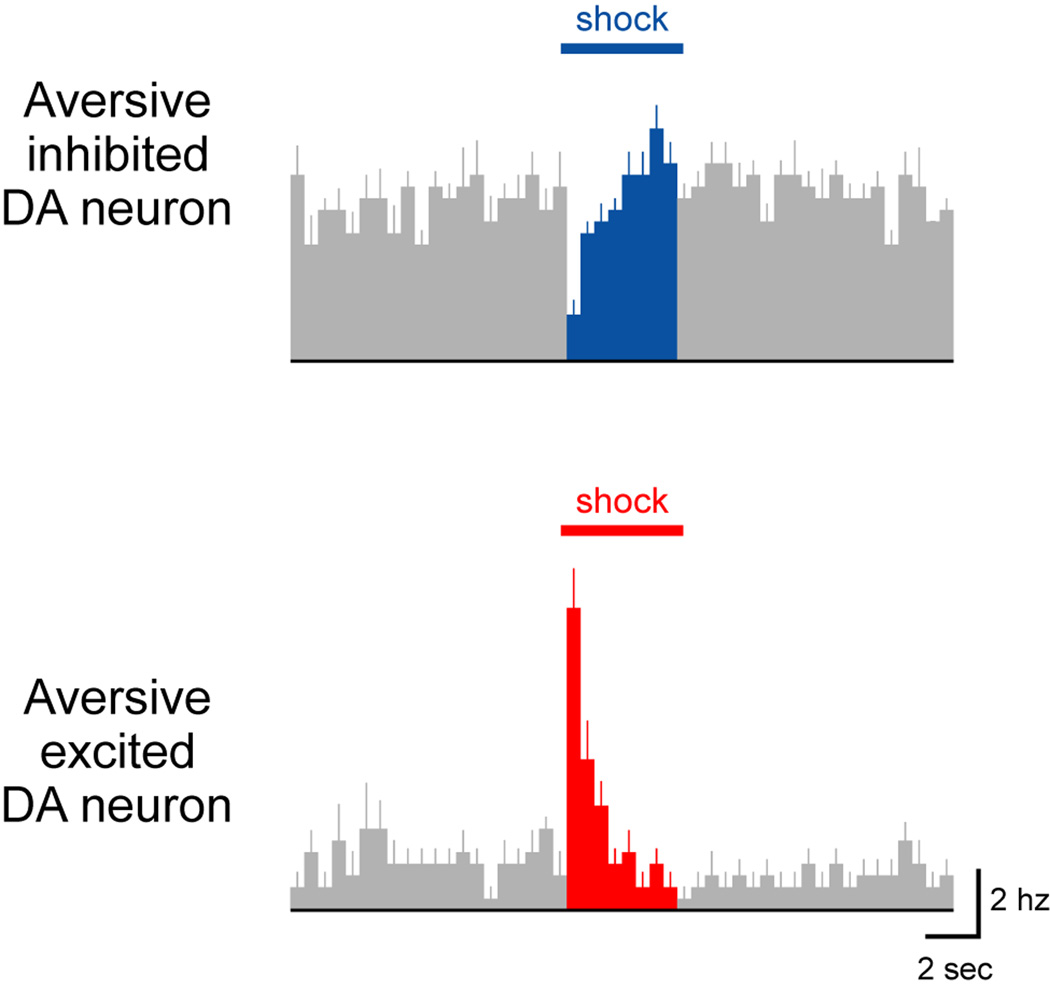

This diversity of DA release patterns and functions is difficult to reconcile with the idea that DA neurons transmit a uniform motivational signal to all brain structures. These diverse responses could be explained, however, if DA neurons are themselves diverse – composed of multiple neural populations that support different aspects of aversive processing. This view is supported by neural recording studies in anesthetized animals. These studies have shown that noxious stimuli evoke excitation in some DA neurons but inhibition in other DA neurons (Chiodo et al., 1980; Maeda and Mogenson, 1982; Schultz and Romo, 1987; Mantz et al., 1989; Gao et al., 1990; Coizet et al., 2006). Importantly, both excitatory and inhibitory responses occur in neurons confirmed to be dopaminergic using juxtacellular labeling (Brischoux et al., 2009) (Figure 3). A similar diversity of aversive responses occurs during active behavior. Different groups of DA neurons are phasically excited or inhibited by aversive events including noxious stimulation of the skin (Kiyatkin, 1988a; Kiyatkin, 1988b), sensory cues predicting aversive shocks (Guarraci and Kapp, 1999), aversive airpuffs (Matsumoto and Hikosaka, 2009b), and sensory cues predicting aversive airpuffs (Matsumoto and Hikosaka, 2009b; Joshua et al., 2009a). Furthermore, when two DA neurons are recorded simultaneously, their aversive responses generally have little trial-to-trial correlation with each other (Joshua et al., 2009b), suggesting that aversive responses are not coordinated across the DA population as a whole.

Figure 3. Diverse dopamine neuron responses to aversive events.

Two example DA neurons in the VTA that were phasically inhibited (top) or excited (bottom) by noxious footshocks. These neurons were recorded in anesthetized rats and were confirmed to be dopaminergic using juxtacellular labeling. Adapted from (Brischoux et al., 2009).

To understand the functions of these diverse aversive responses, we need to know how they are combined with reward responses to generate a meaningful motivational signal. A recent study investigated this topic and revealed that DA neurons are divided into multiple populations with distinct motivational signals (Matsumoto and Hikosaka, 2009b). One population is excited by rewarding events and inhibited by aversive events, as though encoding motivational value (Figure 4A). A second population is excited by both rewarding and aversive events in similar manners, as though encoding motivational salience (Figure 4B). In both of these populations many neurons are sensitive to reward and aversive predictions: they respond when rewarding events are more rewarding than predicted and when aversive events are more aversive than predicted (Matsumoto and Hikosaka, 2009b). This shows that their aversive responses are truly caused by predictions about aversive events, ruling out the possibility that they could be caused by non-specific factors such as raw sensory input or generalized associations with reward (Schultz, 2010). These two populations differ, however, in the detailed nature of their predictive code. Motivational value coding DA neurons encode an accurate prediction error signal, including strong inhibition by omission of rewards and mild excitation by omission of aversive events (Figure 4A, right). In contrast, motivational salience coding DA neurons respond when salient events are present but not when they are absent (Figure 4B, right), consistent with theoretical notions of arousal (Lang and Davis, 2006) [FOOTNOTE2]. Evidence for these two DA neuron populations has been observed even when neural activity has been examined in an averaged manner. 
Thus, studies targeting different parts of the DA system found phasic DA signals encoding aversive events with inhibition (Roitman et al., 2008), similar to coding of motivational value, or with excitation (Joshua et al., 2008; Anstrom et al., 2009), similar to coding of motivational salience.
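The basic distinction between the two populations can be summarized in a toy sketch in which each event is assigned a signed motivational value. The numeric values are hypothetical, and the sketch deliberately ignores the prediction-related differences between the two populations described above.

```python
def value_coding_response(motivational_value):
    """Value coding: excited by good events, inhibited by bad events."""
    return motivational_value


def salience_coding_response(motivational_value):
    """Salience coding: excited by any motivationally important event."""
    return abs(motivational_value)


# Hypothetical signed values for three kinds of outcome.
events = {"juice": +1.0, "neutral": 0.0, "airpuff": -1.0}

value_responses = {name: value_coding_response(v) for name, v in events.items()}
salience_responses = {name: salience_coding_response(v) for name, v in events.items()}
```

Here the value-coding response has opposite signs for juice and airpuffs, whereas the salience-coding response is equally positive for both and smaller for the neutral event.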

Figure 4. Distinct dopamine neuron populations encoding motivational value and salience.

(A) Motivational value coding DA neurons are excited by reward cues and reward outcomes (fruit juice) and inhibited by aversive cues and aversive outcomes (airpuffs).

(B) Motivational salience coding DA neurons are excited by both reward and aversive cues and outcomes. Analysis and classification of neurons adapted from (Bromberg-Martin et al., 2010a); original data from (Matsumoto and Hikosaka, 2009b).

These recent findings might appear to contradict an early report that DA neurons respond preferentially to reward cues rather than aversive cues (Mirenowicz and Schultz, 1996). When examined closely, however, even that study is fully consistent with DA value and salience coding. In that study reward cues led to reward outcomes with high probability (>90%) while aversive cues led to aversive outcomes with low probability (<10%). Hence value and salience-coding DA neurons would have little response to the aversive cues, accurately encoding their low level of aversiveness.

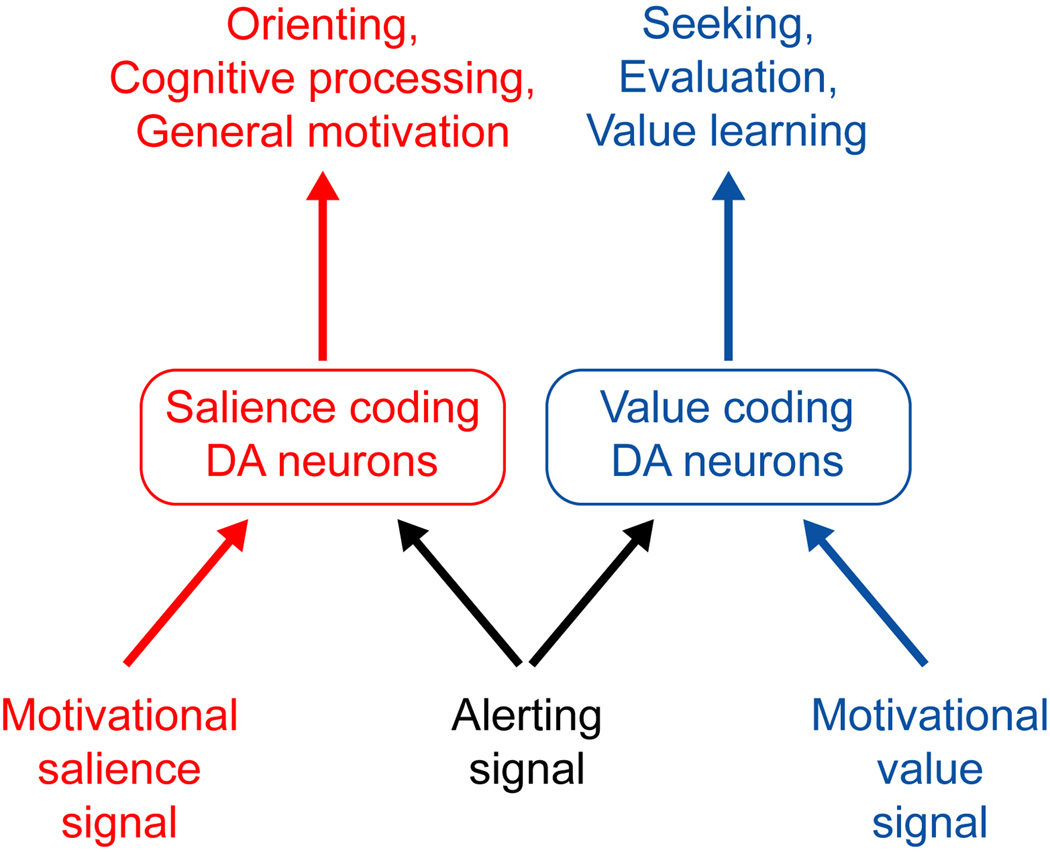

Functional role of motivational value and salience signals

Taken together, the above findings indicate that DA neurons are divided into multiple populations suitable for distinct roles in motivational control. Motivational value coding DA neurons fit well with current theories of dopamine neurons and reward processing (Schultz et al., 1997; Berridge and Robinson, 1998; Wise, 2004). These neurons encode a complete prediction error signal and encode rewarding and aversive events in opposite directions. Thus these neurons provide an appropriate instructive signal for seeking, evaluation, and value learning (Figure 5). If a stimulus causes value coding DA neurons to be excited then we should approach it, assign it high value, and learn actions to seek it again in the future. If a stimulus causes value coding DA neurons to be inhibited then we should avoid it, assign it low value, and learn actions to avoid it again in the future.

Figure 5. Hypothesized functions of motivational value, salience, and alerting signals.

Hypothesized functions of motivational signals in DA neurons. Motivational value signals are sent to value coding DA neurons which instruct seeking of rewards, evaluation of outcomes, and value learning. Motivational salience signals are sent to salience coding DA neurons which support attentional orienting, cognitive processing, and general motivation. Alerting signals are sent to both populations. In value coding DA neurons they promote seeking of environments where alert cues are available so that salient outcomes can be anticipated in advance. In salience coding DA neurons they implement this anticipation by promoting orienting to alert cues and deployment of cognitive and motivational resources.

In contrast, motivational salience coding DA neurons fit well with theories of dopamine neurons and processing of salient events (Redgrave et al., 1999; Horvitz, 2000; Joseph et al., 2003; Kapur, 2003). These neurons are excited by both rewarding and aversive events and have weaker responses to neutral events, providing an appropriate instructive signal for neural circuitry to learn to detect, predict, and respond to situations of high importance. Here we will consider three such brain systems (Figure 5). First, neural circuits for visual and attentional orienting are calibrated to discover information about all types of events, both rewarding and aversive. For instance, both reward and aversive cues attract orienting reactions more effectively than neutral cues (Lang and Davis, 2006; Matsumoto and Hikosaka, 2009b; Austin and Duka, 2010). Second, both rewarding and aversive situations engage neural systems for cognitive control and action selection - we need to engage working memory to hold information in mind, conflict resolution to decide upon a course of action, and long-term memory to remember the resulting outcome (Bradley et al., 1992; Botvinick et al., 2001; Savine et al., 2010). Third, both rewarding and aversive situations require an increase in general motivation to energize actions and to ensure that they are executed properly. Indeed, DA neurons are critical in motivating effort to achieve high-value goals and in translating knowledge of task demands into reliable motor performance (Berridge and Robinson, 1998; Mazzoni et al., 2007; Niv et al., 2007; Salamone et al., 2007).
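The contrast between the two populations can be illustrated with a toy model. It assumes, purely for illustration, that a value coding response behaves like a signed prediction error and a salience coding response like its unsigned magnitude. The function names and outcome values are hypothetical and are not taken from the cited studies.

```python
# Toy model of the two hypothesized response types (assumed forms, not fitted to data).
def value_coding_response(outcome, expected):
    """Signed error: positive (excitation) if better than expected, negative (inhibition) if worse."""
    return outcome - expected

def salience_coding_response(outcome, expected):
    """Unsigned error: excitation for any motivationally significant deviation, near zero for neutral events."""
    return abs(outcome - expected)

def suggested_action(value_response):
    """Map the sign of the value signal onto the approach/avoid rule described in the text."""
    if value_response > 0:
        return "approach"
    if value_response < 0:
        return "avoid"
    return "no change"

# Hypothetical outcome values: reward positive, aversive negative, neutral zero.
OUTCOMES = {"juice": 1.0, "airpuff": -1.0, "neutral": 0.0}

for name, outcome in OUTCOMES.items():
    v = value_coding_response(outcome, expected=0.0)
    s = salience_coding_response(outcome, expected=0.0)
    print(f"{name:8s} value={v:+.1f} salience={s:.1f} action={suggested_action(v)}")
```

In this sketch the value signal distinguishes juice from airpuff by sign, while the salience signal treats them alike and separates both from the neutral event.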

Dopamine excitation by alerting sensory cues

In addition to their signals encoding motivational value and salience, the majority of DA neurons also have burst responses to several types of sensory events that are not directly associated with rewarding or aversive experiences. These responses have been theorized to depend on a number of neural and psychological factors, including direct sensory input, surprise, novelty, arousal, attention, salience, generalization, and pseudo-conditioning (Schultz, 1998; Redgrave et al., 1999; Horvitz, 2000; Lisman and Grace, 2005; Redgrave and Gurney, 2006; Joshua et al., 2009a; Schultz, 2010).

Here we will attempt to synthesize these ideas and account for these DA responses in terms of a single underlying signal, an alerting signal (Figure 5). The term ‘alerting’ was used by Schultz (Schultz, 1998) as a general term for events that attract attention. Here we will use it in a more specific sense. By an alerting event, we mean an unexpected sensory cue that captures attention based on a rapid assessment of its potential importance, using simple features such as its location, size, and sensory modality. Such alerting events often trigger immediate behavioral reactions to investigate them and determine their precise meaning. Thus DA alerting signals typically occur at short latencies, are based on the rough features of a stimulus, and are best correlated with immediate reactions such as orienting reactions (Schultz and Romo, 1990; Joshua et al., 2009a; Schultz, 2010). This is in contrast to other motivational signals in DA neurons which typically occur at longer latencies, take into account the precise identity of the stimulus, and are best correlated with considered behavioral actions such as decisions to approach or avoid (Schultz and Romo, 1990; Joshua et al., 2009a; Schultz, 2010).

DA alerting responses can be triggered by surprising sensory events such as unexpected light flashes and auditory clicks, which evoke prominent burst excitations in 60–90% of DA neurons throughout the SNc and VTA (Strecker and Jacobs, 1985; Horvitz et al., 1997; Horvitz, 2000) (Figure 6A). These alerting responses seem to reflect the degree to which the stimulus is surprising and captures attention; they are reduced if a stimulus occurs at predictable times, if attention is engaged elsewhere, or during sleep (Schultz, 1998; Takikawa et al., 2004; Strecker and Jacobs, 1985; Steinfels et al., 1983). For instance, an unexpected clicking sound evokes a prominent DA burst when a cat is in a passive state of quiet waking, but has no effect when the cat is engaged in attention-demanding activities such as hunting a rat, feeding, grooming, being petted by the experimenter, and so on (Strecker and Jacobs, 1985) (Figure 6A). Similarly, DA burst responses are triggered by sensory events that are physically weak but are alerting due to their novelty (Ljungberg et al., 1992; Schultz, 1998). These responses habituate as the novel stimulus becomes familiar, in parallel with the habituation of orienting reactions (Figure 6B). Consistent with these findings, surprising and novel events evoke DA release in downstream structures (Lisman and Grace, 2005) and activate DA-related brain circuits in a manner that shapes reward processing (Zink et al., 2003; Davidson et al., 2004; Duzel et al., 2010).

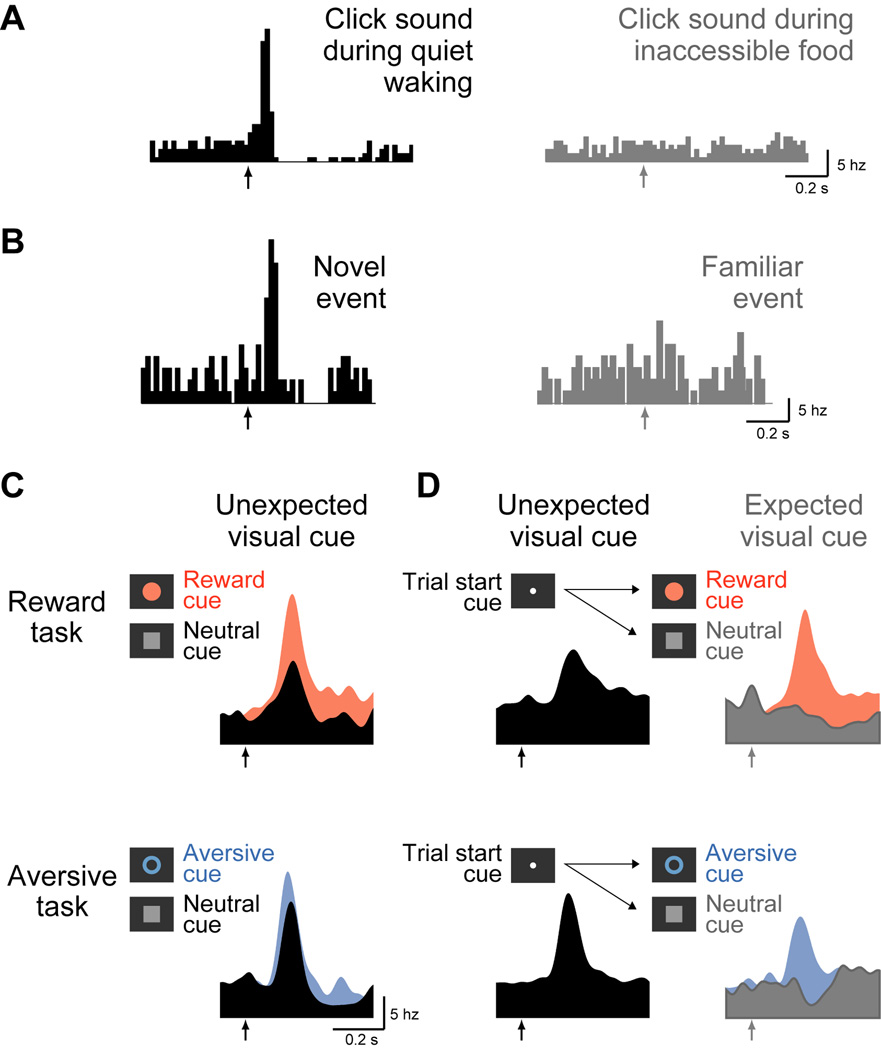

Figure 6. Dopamine neuron excitatory responses to alerting events.

DA neurons are excited by sensory cues that are alerting (left, black) but do not respond when the same cues are rendered non-alerting (right, gray).

(A) A DA neuron bursts in response to an unexpected 113 dB auditory click. These bursts occur when the cat is in a state of quiet waking, but not when the cat is preoccupied by the presence of inaccessible food. Adapted from (Strecker and Jacobs, 1985).

(B) A DA neuron bursts in response to a new sensory stimulus (a door opening). These bursts occur when it is relatively novel (presentations 12–33) but not when it is familiar (presentations 56–75). Histograms built using data from a neuron reported in (Ljungberg et al., 1992).

(C,D) DA neurons are excited by unexpected visual cues during tasks when the cues are potentially rewarding or aversive. In separate blocks of trials, animals were presented with cues and outcomes that were potentially rewarding (top, reward task) or aversive (bottom, aversive task). Data show the averaged activity of four motivational salience coding DA neurons; for clarity, stimulus colors have been modified and only a subset of conditions is shown. Adapted from (Bromberg-Martin et al., 2010a).

(C) In a first experiment, motivational cues are presented with unpredictable timing. The DA neurons are excited by all cues, even a neutral cue (black curve) that had never been paired with rewarding or aversive outcomes.

(D) In a second experiment, the timing of motivational cues is made fully predictable by presenting a “trial start cue” one second in advance. The DA neurons are no longer excited by the neutral cue (right, gray); instead, their excitation shifts to the trial start cue (left, black).

DA alerting responses are also triggered by unexpected sensory cues that have the potential to provide new information about motivationally salient events. As expected for a short-latency alerting signal, these responses are rather non-selective: they are triggered by any stimulus that merely resembles a motivationally salient cue, even if the resemblance is very slight (a phenomenon called generalization) (Schultz, 1998). As a result, DA neurons often respond to a stimulus with a mixture of two signals: a fast alerting signal encoding the fact that the stimulus is potentially important, and a second signal encoding its actual rewarding or aversive meaning (Schultz and Romo, 1990; Waelti et al., 2001; Tobler et al., 2003; Day et al., 2007; Kobayashi and Schultz, 2008; Fiorillo et al., 2008; Nomoto et al., 2010) (see (Kakade and Dayan, 2002; Joshua et al., 2009a; Schultz, 2010) for review). An example can be seen in a set of motivational salience coding DA neurons shown in Figure 6C (Bromberg-Martin et al., 2010a). These neurons were excited by reward and aversive cues, but they were also excited by a neutral cue. The neutral cue had never been paired with motivational outcomes, but did have a (very slight) physical resemblance to the reward and aversive cues.

These alerting responses seem closely tied to a sensory cue’s ability to trigger orienting reactions to examine it further and discover its meaning. This can be seen in three notable properties. First, alerting responses only occur for sensory cues that have to be examined to determine their meaning, not for intrinsically rewarding or aversive events such as delivery of juice or airpuffs (Schultz, 2010). Second, alerting responses only occur when a cue is potentially important and has the ability to trigger orienting reactions, not when the cue is irrelevant to the task at hand and fails to trigger orienting reactions (Schultz and Romo, 1990). Third, alerting responses are enhanced in situations when cues would trigger an abrupt shift of attention – when they appear at an unexpected time or away from the center of gaze (Bromberg-Martin et al., 2010a). Thus when motivational cues are presented with unpredictable timing they trigger immediate orienting reactions and a generalized DA alerting response – excitation by all cues including neutral cues (Figure 6C, black). But if their timing is made predictable – for example, by forewarning the subjects with a “trial start cue” presented one second before the cues appear – the cues no longer evoke an alerting response (Figure 6D, gray). Instead, the alerting response shifts to the trial start cue – the first event of the trial that has unpredictable timing and evokes orienting reactions (Figure 6D, black).
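The three properties above can be summarized as a simple rule-based caricature. The sketch below treats each property as an all-or-none gate, which is a simplifying assumption (the text describes the third property as an enhancement rather than a strict requirement). The `Event` fields and the example events are hypothetical labels chosen to mirror the two experiments in Figure 6C,D.

```python
from dataclasses import dataclass

@dataclass
class Event:
    name: str
    needs_examination: bool      # property 1: a sensory cue whose meaning must be discovered
    potentially_important: bool  # property 2: relevant enough to trigger orienting
    timing_predictable: bool     # property 3: forewarned events do not shift attention abruptly

def evokes_alerting_response(event):
    """Assumed all-or-none rule combining the three properties described in the text."""
    return (event.needs_examination
            and event.potentially_important
            and not event.timing_predictable)

# First experiment: cues appear with unpredictable timing.
unpredictable_timing = [
    Event("neutral cue", True, True, False),
    Event("juice delivery", False, True, False),  # intrinsic outcome, nothing to examine
]
# Second experiment: a trial start cue forewarns the subject one second in advance.
predictable_timing = [
    Event("trial start cue", True, True, False),
    Event("neutral cue", True, True, True),
]

for label, events in [("unpredictable", unpredictable_timing), ("predictable", predictable_timing)]:
    for e in events:
        print(f"{label:13s} {e.name:16s} alerting={evokes_alerting_response(e)}")
```

With these assumed settings the neutral cue evokes an alerting response only when its timing is unpredictable, and the response moves to the trial start cue once that cue becomes the first unpredictably timed event of the trial.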

What is the underlying mechanism that generates DA neuron alerting signals? One hypothesis is that alerting responses are simply conventional reward prediction error signals that occur at short latencies, encoding the expected reward value of a stimulus before it has been fully discriminated (Kakade and Dayan, 2002). More recent evidence, however, suggests that alerting signals can be generated by a distinct mechanism from conventional DA reward signals (Satoh et al., 2003; Bayer and Glimcher, 2005; Bromberg-Martin et al., 2010a; Bromberg-Martin et al., 2010c; Nomoto et al., 2010). Most strikingly, the alerting response to the trial start cue is not restricted to rewarding tasks; it can have equal strength during an aversive task in which no rewards are delivered (Figure 6C,D, bottom, “aversive task”). This occurs even though conventional DA reward signals in the same neurons correctly signal that the rewarding task has a much higher expected value than the aversive task (Bromberg-Martin et al., 2010a). These alerting signals are not purely a form of value coding or purely a form of salience coding, because they occur in the majority of both motivational value and salience coding DA neurons (Bromberg-Martin et al., 2010a). A second dissociation can be seen in the way that DA neurons predict future rewards based on the memory of past reward outcomes (Satoh et al., 2003; Bayer and Glimcher, 2005). Whereas conventional DA reward signals are controlled by a long-timescale memory trace optimized for accurate reward prediction, alerting responses to the trial start cue are controlled by a separate memory trace resembling that seen in immediate orienting reactions (Bromberg-Martin et al., 2010c). A third dissociation can be seen in the way that these signals are distributed across the DA neuron population. Whereas conventional DA reward signals are strongest in the ventromedial SNc, alerting responses to the trial start cue (and to other unexpectedly timed cues) are broadcast throughout the SNc (Nomoto et al., 2010).

In contrast to these dissociations from conventional reward signals, DA alerting signals are correlated with the speed of orienting and approach responses to the alerting event (Satoh et al., 2003; Bromberg-Martin et al., 2010a; Bromberg-Martin et al., 2010c). This suggests that alerting signals are generated by a neural process that motivates fast reactions to investigate potentially important events. At the present time, unfortunately, relatively little is known about precisely what events this process treats as ‘important’. For example, are alerting responses equally sensitive to rewarding and aversive events? Alerting responses are known to occur for stimuli that resemble reward cues or that resemble both reward and aversive cues (e.g. by sharing the same sensory modality). But it is not yet known whether alerting responses occur for stimuli that solely resemble aversive cues.

Functional role of dopamine alerting signals

As we have seen, alerting signals are likely to be generated by a distinct mechanism from motivational value and salience signals. However, alerting signals are sent to both motivational value and salience coding DA neurons, and therefore are likely to regulate brain processing and behavior in a similar manner to value and salience signals (Figure 5).

Alerting signals sent to motivational salience coding DA neurons would support orienting of attention to the alerting stimulus, engagement of cognitive resources to discover its meaning and decide on a plan for action, and an increase in motivation levels to implement this plan efficiently (Figure 5). These effects could occur through immediate effects on neural processing or by reinforcing actions which led to detection of the alerting event. This functional role fits well with the correlation between DA alerting responses and fast behavioral reactions to the alerting stimulus, and with theories that short-latency DA neuron responses are involved in orienting of attention, arousal, enhancement of cognitive processing, and immediate behavioral reactions (Redgrave et al., 1999; Horvitz, 2000; Joseph et al., 2003; Lisman and Grace, 2005; Redgrave and Gurney, 2006; Joshua et al., 2009a).

The presence of alerting signals in motivational value coding DA neurons is more difficult to explain. These neurons transmit motivational value signals that are ideal for seeking, evaluation of outcomes, and value learning; yet they can also be excited by alerting events such as unexpected clicking sounds and the onset of aversive trials. According to our hypothesized pathway (Figure 5), this would cause alerting events to be assigned positive value and to be sought after in a manner similar to rewards! While surprising at first glance, there is reason to suspect that alerting events can be treated as positive goals. Alerting signals provide the first warning that a potentially important event is about to occur, and hence provide the first opportunity to take action to control that event. If alerting cues are available, motivationally salient events can be detected, predicted, and prepared for in advance; if alerting cues are absent, motivationally salient events always occur as an unexpected surprise. Indeed, humans and animals often express a preference for environments where rewarding, aversive, and even motivationally neutral sensory events can be observed and predicted in advance (Badia et al., 1979; Herry et al., 2007; Daly, 1992; Chew and Ho, 1994) and many DA neurons signal the behavioral preference to view reward-predictive information (Bromberg-Martin and Hikosaka, 2009). DA alerting signals may support these preferences by assigning positive value to environments where potentially important sensory cues can be anticipated in advance.

Neural pathways for motivational value, salience, and alerting

Thus far we have divided DA neurons into two types which encode motivational value and motivational salience and are suitable for distinct roles in motivational control (Figure 5). How does this conceptual scheme map onto neural pathways in the brain? Here we propose a hypothesis about the anatomical locations of these neurons, their projections to downstream structures, and the sources of their motivational signals (Figures 7,8).

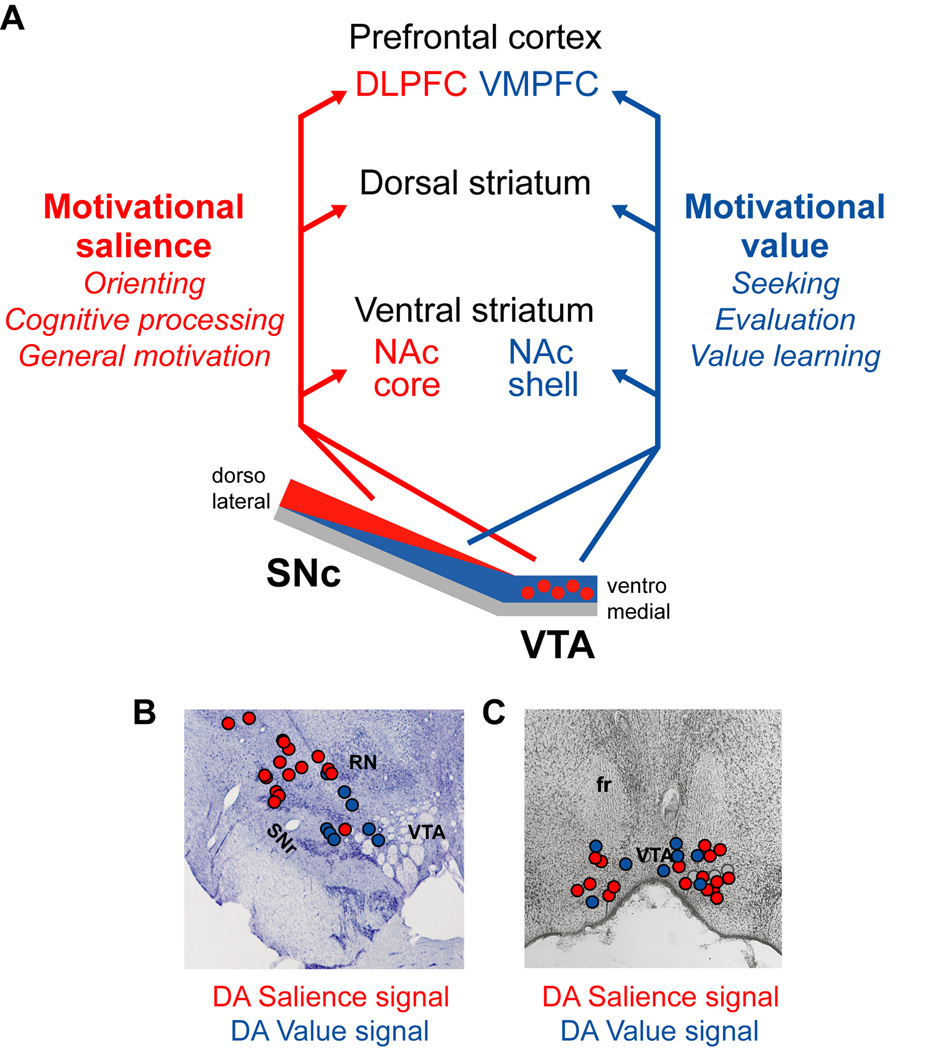

Figure 7. Hypothesized anatomical location and projections of dopamine motivational value and salience coding neurons.

(A) In our hypothesis, motivational salience coding DA neurons are located predominantly in the dorsolateral SNc and medial VTA. They may send signals to regions of the nucleus accumbens core (NAc core), dorsal striatum, and dorsal and lateral prefrontal cortex (DLPFC). Motivational value coding DA neurons are located predominantly in the ventromedial SNc and throughout the VTA. They may send signals to regions of the nucleus accumbens shell (NAc shell), dorsal striatum, and ventromedial prefrontal cortex (VMPFC).

(B) DA excitatory responses to aversive cues (red dots) often occur in the dorsolateral SNc, while inhibitory responses (blue dots) often occur in the ventromedial SNc. Data are from one monkey and collapsed across three adjacent 1 mm sections. Also labeled are the substantia nigra pars reticulata (SNr) and red nucleus (RN). Adapted from (Matsumoto and Hikosaka, 2009b).

(C) DA neurons with greater excitation (red dots) or inhibition (blue dots) to aversive cues than neutral cues are mixed within the medial VTA. Also shown are neurons that had greater responses to neutral cues than aversive cues (gray dots). Also labeled is the fasciculus retroflexus (fr). Data are from eight rabbits and collapsed across three adjacent sections. Adapted from (Guarraci and Kapp, 1999).

Anatomical locations of value and salience coding neurons

A recent study mapped the locations of DA reward and aversive signals in the lateral midbrain including the SNc and lateralmost part of the VTA (Matsumoto and Hikosaka, 2009b). Motivational value and motivational salience signals were distributed across this region in an anatomical gradient. Motivational value signals were found more commonly in neurons in the ventromedial SNc and lateral VTA, while motivational salience signals were found more commonly in neurons in the dorsolateral SNc (Figure 7B). This is consistent with reports that DA reward value coding is strongest in the ventromedial SNc (Nomoto et al., 2010) while aversive excitations tend to be strongest more laterally (Mirenowicz and Schultz, 1996). Other studies have explored the more medial midbrain. These studies found a mixture of excitatory and inhibitory aversive responses with no significant difference in their locations, although with a trend for aversive excitations to be located more ventrally (Guarraci and Kapp, 1999; Brischoux et al., 2009) (Figure 7C).

Destinations of motivational value signals

According to our hypothesis, motivational value coding DA neurons should project to brain regions involved in approach and avoidance actions, evaluation of outcomes, and value learning (Figure 5). Indeed, the ventromedial SNc and VTA project to the ventromedial prefrontal cortex (Williams and Goldman-Rakic, 1998) including the orbitofrontal cortex (OFC) (Porrino and Goldman-Rakic, 1982) (Figure 7A). The OFC has been consistently implicated in value coding in functional imaging studies (Anderson et al., 2003; Small et al., 2003; Jensen et al., 2007; Litt et al., 2010) and single neuron recordings (Morrison and Salzman, 2009; Roesch and Olson, 2004). The OFC is thought to evaluate choice options (Padoa-Schioppa, 2007; Kable and Glimcher, 2009), encode outcome expectations (Schoenbaum et al., 2009), and update these expectations during learning (Walton et al., 2010). Furthermore, the OFC is involved in learning from negative reward prediction errors (Takahashi et al., 2009) which are strongest in value-coding DA neurons (Figure 4).

In addition, the medial portions of the dopaminergic midbrain project to the ventral striatum including the nucleus accumbens shell (NAc shell) (Haber et al., 2000) (Figure 7A). A recent study demonstrated that the NAc shell receives phasic DA signals encoding the motivational value of taste outcomes (Roitman et al., 2008). These signals are likely to cause value learning because direct infusion of DA drugs into the NAc shell is strongly reinforcing (Ikemoto, 2010) while treatments that reduce DA input to the shell can induce aversions (Liu et al., 2008). One caveat is that studies of NAc shell DA release over long timescales (minutes) have produced mixed results, some consistent with value coding and others with salience coding (e.g. (Bassareo et al., 2002; Ventura et al., 2007)). This suggests that value signals may be restricted to specific locations within the NAc shell. Notably, different regions of the NAc shell are specialized for controlling appetitive and aversive behavior (Reynolds and Berridge, 2002), which both require input from DA neurons (Faure et al., 2008).

Finally, DA neurons throughout the extent of the SNc send heavy projections to the dorsal striatum (Haber et al., 2000), suggesting that the dorsal striatum may receive both motivational value and salience coding DA signals (Figure 7A). Motivational value coding DA neurons would provide an ideal instructive signal for striatal circuitry involved in value learning, such as learning of stimulus-response habits (Faure et al., 2005; Yin and Knowlton, 2006; Balleine and O'Doherty, 2010). When these DA neurons burst, they would engage the direct pathway to learn to gain reward outcomes; when they pause, they would engage the indirect pathway to learn to avoid aversive outcomes (Figure 2). Indeed, there is recent evidence that the striatal pathways follow exactly this division of labor for reward and aversive processing (Hikida et al., 2010). It is still unknown, however, how neurons in these pathways respond to rewarding and aversive events during behavior. At least in the dorsal striatum as a whole, a subset of neurons respond to certain rewarding and aversive events in distinct manners (Ravel et al., 2003; Yamada et al., 2004, 2007; Joshua et al., 2008).

Destinations of motivational salience signals

According to our hypothesis, motivational salience coding DA neurons should project to brain regions involved in orienting, cognitive processing, and general motivation (Figure 5). Indeed, DA neurons in the dorsolateral midbrain send projections to dorsal and lateral frontal cortex (Williams and Goldman-Rakic, 1998) (Figure 7A), a region which has been implicated in cognitive functions such as attentional search, working memory, cognitive control, and decision making between motivational outcomes (Williams and Castner, 2006; Lee and Seo, 2007; Wise, 2008; Kable and Glimcher, 2009; Wallis and Kennerley, 2010). Dorsolateral prefrontal cognitive functions are tightly regulated by DA levels (Robbins and Arnsten, 2009) and are theorized to depend on phasic DA neuron activation (Cohen et al., 2002; Lapish et al., 2007). Notably, a subset of lateral prefrontal neurons respond to both rewarding and aversive visual cues, and the great majority respond in the same direction resembling coding of motivational salience (Kobayashi et al., 2006). Furthermore, the activity of these neurons is correlated with behavioral success at performing working memory tasks (Kobayashi et al., 2006). Although this dorsolateral DA→dorsolateral frontal cortex pathway appears to be specific to primates (Williams and Goldman-Rakic, 1998), a functionally similar pathway may exist in other species. In particular, many of the cognitive functions of the primate dorsolateral prefrontal cortex are performed by the rodent medial prefrontal cortex (Uylings et al., 2003), and there is evidence that this region receives DA motivational salience signals and controls salience-related behavior (Mantz et al., 1989; Di Chiara, 2002; Joseph et al., 2003; Ventura et al., 2007; Ventura et al., 2008).

Given the evidence that the VTA contains both salience and value coding neurons and that value coding signals are sent to the NAc shell, salience signals might be sent to the NAc core (Figure 7A). Indeed, the NAc core (but not shell) is crucial for enabling motivation to overcome response costs such as physical effort; for performance of set-shifting tasks requiring cognitive flexibility; and for enabling reward cues to cause an enhancement of general motivation (Ghods-Sharifi and Floresco, 2010; Floresco et al., 2006; Hall et al., 2001; Cardinal, 2006). Consistent with coding of motivational salience, the NAc core receives phasic bursts of DA during both rewarding experiences (Day et al., 2007) and aversive experiences (Anstrom et al., 2009).

Finally, as discussed above, some salience coding DA neurons may project to the dorsal striatum (Figure 7A). While some regions of the dorsal striatum are involved in functions related to learning action values, the dorsal striatum is also involved in functions that should be engaged for all salient events, such as orienting, attention, working memory, and general motivation (Hikosaka et al., 2000; Klingberg, 2010; Palmiter, 2008). Indeed, a subset of dorsal striatal neurons are more strongly responsive to rewarding and aversive events than to neutral events (Ravel et al., 1999; Blazquez et al., 2002; Yamada et al., 2004, 2007), although their causal role in motivated behavior is not yet known.

Sources of motivational value signals

A recent series of studies suggests that DA neurons receive motivational value signals from a small nucleus in the epithalamus, the lateral habenula (LHb) (Hikosaka, 2010) (Figure 8). The LHb exerts potent negative control over DA neurons: LHb stimulation inhibits DA neurons at short latencies (Christoph et al., 1986) and can regulate learning in an opposite manner to VTA stimulation (Shumake et al., 2010). Consistent with a negative control signal, many LHb neurons have mirror-inverted phasic responses to DA neurons: LHb neurons are inhibited by positive reward prediction errors and excited by negative reward prediction errors (Matsumoto and Hikosaka, 2007, 2009a; Bromberg-Martin et al., 2010a; Bromberg-Martin et al., 2010c). In several cases these signals occur at shorter latencies in the LHb, consistent with LHb → DA transmission (Matsumoto and Hikosaka, 2007; Bromberg-Martin et al., 2010a).

Figure 8. Hypothesized sources of motivational value, salience, and alerting signals.

In our hypothesis, motivational salience signals are sent to DA neurons through the central amygdala (CeA). Motivational value and alerting signals may be sent to DA neurons through a pathway including the globus pallidus border (GPb), lateral habenula (LHb), and rostromedial tegmental nucleus (RMTg). Value signals related to aversive outcomes may also be sent by the parabrachial nucleus (PBN), while alerting signals may also be sent by the superior colliculus (SC) and pedunculopontine tegmental nucleus (PPTg).

The LHb is capable of controlling DA neurons throughout the midbrain, but several lines of evidence suggest that it exerts preferential control over motivational value coding DA neurons. First, LHb neurons encode motivational value in a manner closely mirroring value-coding DA neurons – they encode both positive and negative reward prediction errors and respond in opposite directions to rewarding and aversive events (Matsumoto and Hikosaka, 2009a; Bromberg-Martin et al., 2010a). Second, LHb stimulation has its most potent effects on DA neurons whose properties are consistent with value coding, including inhibition by no-reward cues and anatomical location in the ventromedial SNc (Matsumoto and Hikosaka, 2007, 2009b). Third, lesions to the LHb impair DA neuron inhibitory responses to aversive events, suggesting a causal role for the LHb in generating DA value signals (Gao et al., 1990).

The LHb is part of a more extensive neural pathway by which DA neurons can be controlled by the basal ganglia (Figure 8). The LHb receives signals resembling reward prediction errors through a projection from a population of neurons located around the globus pallidus border (GPb) (Hong and Hikosaka, 2008). Once these signals reach the LHb they are likely to be sent to DA neurons through a disynaptic pathway in which the LHb excites midbrain GABA neurons that in turn inhibit DA neurons (Ji and Shepard, 2007; Omelchenko et al., 2009; Brinschwitz et al., 2010). This could occur through LHb projections to interneurons in the VTA and to an adjacent GABA-ergic nucleus called the rostromedial tegmental nucleus (RMTg) (Jhou et al., 2009b) (also called the ‘caudal tail of VTA’ (Kaufling et al., 2009)). Notably, RMTg neurons have response properties similar to LHb neurons, encode motivational value, and have a heavy inhibitory projection to dopaminergic midbrain (Jhou et al., 2009a). Thus, the complete basal ganglia pathway to send motivational value signals to DA neurons may be GPb→LHb→RMTg→DA (Hikosaka, 2010).
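The sign logic of the disynaptic route can be made explicit with a small sketch. It encodes only the two connection signs stated above (LHb excites RMTg GABA neurons, which inhibit DA neurons) and multiplies them to obtain the net effect. The data structure and function names are hypothetical, and the GPb→LHb link is omitted because its sign is not specified in the text.

```python
from math import prod

# Each link: (source, target, sign), where +1 is excitatory and -1 is inhibitory.
# Only links whose sign is stated in the text are included.
LHB_TO_DA = [
    ("LHb", "RMTg", +1),  # LHb excites midbrain GABA neurons
    ("RMTg", "DA", -1),   # GABA neurons inhibit DA neurons
]

def net_sign(pathway):
    """Net effect of the first node on the last: the product of the link signs."""
    return prod(sign for _source, _target, sign in pathway)

def da_change(lhb_change, pathway=LHB_TO_DA):
    """Direction of the DA response for a given direction of LHb activity change (+1 or -1)."""
    return lhb_change * net_sign(pathway)

print("LHb excited   -> DA change:", da_change(+1))  # e.g. negative reward prediction error
print("LHb inhibited -> DA change:", da_change(-1))  # e.g. positive reward prediction error
```

One excitatory and one inhibitory link give a net negative sign, matching the mirror-inverted relationship between LHb and DA responses described in this section.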

An important question for future research is whether motivational value signals are channeled solely through the LHb or whether they are carried by multiple input pathways. Notably, DA inhibitions by aversive footshocks are controlled by activity in the mesopontine parabrachial nucleus (PBN) (Coizet et al., 2010) (Figure 8). This nucleus contains neurons that receive direct input from the spinal cord encoding noxious sensations and could inhibit DA neurons through excitatory projections to the RMTg (Coizet et al., 2010; Gauriau and Bernard, 2002). This suggests that the LHb sends DA neurons motivational value signals for both rewarding and aversive cues and outcomes while the PBN provides a component of the value signal specifically related to aversive outcomes.

Sources of motivational salience signals

Less is known about the source of motivational salience signals in DA neurons. One intriguing candidate is the central nucleus of the amygdala (CeA) which has been consistently implicated in orienting, attention, and general motivational responses during both rewarding and aversive events (Holland and Gallagher, 1999; Baxter and Murray, 2002; Merali et al., 2003; Balleine and Killcross, 2006) (Figure 8). The CeA and other amygdala nuclei contain many neurons whose signals are consistent with motivational salience: they signal rewarding and aversive events in the same direction, are enhanced when events occur unexpectedly, and are correlated with behavioral measures of arousal (Nishijo et al., 1988; Belova et al., 2007; Shabel and Janak, 2009). These signals may be sent to DA neurons because the CeA has descending projections to the brainstem that carry rewarding and aversive information (Lee et al., 2005; Pascoe and Kapp, 1985) and the CeA is necessary for DA release during reward-related events (Phillips et al., 2003a). Furthermore, the CeA participates with DA neurons in pathways consistent with our proposed anatomical and functional networks for motivational salience. A pathway including the CeA, SNc, and dorsal striatum is necessary for learned orienting to food cues (Han et al., 1997; Lee et al., 2005; El-Amamy and Holland, 2007). Consistent with our division of salience vs. value signals, this pathway is needed for learning to orient to food cues but not for learning to approach food outcomes (Han et al., 1997). A second pathway, including the CeA, SNc, VTA, and NAc core, is necessary for reward cues to cause an increase in general motivation to perform reward-seeking actions (Hall et al., 2001; Corbit and Balleine, 2005; El-Amamy and Holland, 2007).

In addition to the CeA, DA neurons could receive motivational salience signals from other sources such as salience-coding neurons in the basal forebrain (Lin and Nicolelis, 2008; Richardson and DeLong, 1991) and neurons in the PBN (Coizet et al., 2010), although these pathways remain to be investigated.

Sources of alerting signals

There are several good candidates for providing DA neurons with alerting signals. Perhaps the most attractive candidate is the superior colliculus (SC), a midbrain nucleus that receives short-latency sensory input from multiple sensory modalities and controls orienting reactions and attention (Redgrave and Gurney, 2006) (Figure 8). The SC has a direct projection to the SNc and VTA (May et al., 2009; Comoli et al., 2003). In anesthetized animals the SC is a vital conduit for short-latency visual signals to reach DA neurons and trigger DA release in downstream structures (Comoli et al., 2003; Dommett et al., 2005). The SC-DA pathway is best suited to convey alerting signals rather than reward and aversion signals, as SC neurons have little response to reward delivery and have only a mild influence over DA aversive responses (Coizet et al., 2006). This suggests a sequence of events in which SC neurons (1) detect a stimulus, (2) select it as potentially important, (3) trigger an orienting reaction to examine the stimulus, and (4) simultaneously trigger a DA alerting response which causes a burst of DA in downstream structures (Redgrave and Gurney, 2006).

A second candidate for sending alerting signals to DA neurons is the LHb (Figure 8). Notably, the unexpected onset of a trial start cue inhibits many LHb neurons in an inverse manner to the DA neuron alerting signal, and this response occurs at shorter latency in the LHb, consistent with an LHb→DA direction of transmission (Bromberg-Martin et al., 2010a; Bromberg-Martin et al., 2010c). We have also anecdotally observed that LHb neurons are commonly inhibited by unexpected visual images and sounds in an inverse manner to DA excitations (M.M., E.S.B.-M., and O.H., unpublished observations), although this awaits a more systematic investigation.

Finally, a third candidate for sending alerting signals to DA neurons is the pedunculopontine tegmental nucleus (PPTg), which projects to both the SNc and VTA and is involved in motivational processing (Winn, 2006) (Figure 8). The PPTg is important for enabling VTA DA neuron bursts (Grace et al., 2007) including burst responses to reward cues (Pan and Hyland, 2005). Consistent with an alerting signal, PPTg neurons have short-latency responses to multiple sensory modalities and are active during orienting reactions (Winn, 2006). There is evidence that PPTg sensory responses are influenced by reward value and by requirements for immediate action (Dormont et al., 1998; Okada et al., 2009) (but see (Pan and Hyland, 2005)). Some PPTg neurons also respond to rewarding or aversive outcomes themselves (Dormont et al., 1998; Kobayashi et al., 2002; Ivlieva and Timofeeva, 2003b, a). It will be important to test whether the signals the PPTg sends to DA neurons are related specifically to alerting or whether they contain other motivational signals such as value and salience.

Directions for future research

We have reviewed the nature of reward, aversive, and alerting signals in DA neurons, and have proposed a hypothesis about the underlying neural pathways and their roles in motivated behavior. We consider this to be a working hypothesis, a guide for future theories and research that will bring us to a more complete understanding. Here we will highlight several areas where further investigation is needed to reveal deeper complexities.

At the present time, our understanding of the neural pathways underlying DA signals is at an early stage. Therefore, we have attempted to infer the sources and destinations of value and salience coding DA signals largely based on indirect measures such as the neural response properties and functional roles of different brain areas. It will be important to put these candidate pathways to a direct test and to discover their detailed properties, aided by recently developed tools that allow DA transmission to be monitored (Robinson et al., 2008) and controlled (Tsai et al., 2009; Tecuapetla et al., 2010; Stuber et al., 2010) with high spatial and temporal precision. As noted above, several of these candidate structures have a topographic organization, suggesting that their communication with DA neurons might be topographic as well. The neural sources of phasic DA signals may also be more complex than the simple feedforward pathways we have proposed, since the neural structures that communicate with DA neurons are densely interconnected (Geisler and Zahm, 2005) and DA neurons can communicate with each other within the midbrain (Ford et al., 2010).

We have focused on a selected set of DA neuron connections, but DA neurons receive functional input from many additional structures including the subthalamic nucleus, laterodorsal tegmental nucleus, bed nucleus of the stria terminalis, prefrontal cortex, ventral pallidum, and lateral hypothalamus (Grace et al., 2007; Shimo and Wichmann, 2009; Jalabert et al., 2009). Notably, lateral hypothalamus orexin neurons project to DA neurons, are activated by rewarding rather than aversive events, and trigger drug-seeking behavior (Harris and Aston-Jones, 2006), suggesting a possible role in value-related functions. DA neurons also send projections to many additional structures including the hypothalamus, hippocampus, amygdala, habenula, and a great many cortical areas. Notably, the anterior cingulate cortex (ACC) has been proposed to receive reward prediction error signals from DA neurons (Holroyd and Coles, 2002) and contains neurons with activity positively related to motivational value (Koyama et al., 1998). Yet ACC activation is also linked to aversive processing (Vogt, 2005; Johansen and Fields, 2004). These ACC functions might be supported by a mixture of DA motivational value and salience signals, which will be important to test in future studies. Indeed, neural signals related to reward prediction errors have been reported in several areas including the medial prefrontal cortex (Matsumoto et al., 2007; Seo and Lee, 2007), orbitofrontal cortex (Sul et al., 2010) (but see (Takahashi et al., 2009; Kennerley and Wallis, 2009)), and dorsal striatum (Kim et al., 2009; Oyama et al., 2010), and their causal relationship to DA neuron activity remains to be discovered.

We have described motivational events with a simple dichotomy, classifying them as ‘rewarding’ or ‘aversive’. Yet these categories contain great variety. An aversive illness is gradual, prolonged, and caused by internal events; an aversive airpuff is fast, brief, and caused by the external world. These situations demand very different behavioral responses, which are likely to be supported by different neural systems. Furthermore, although we have focused our discussion on two types of DA neurons with signals resembling motivational value and salience, a close examination shows that DA neurons are not limited to this strict dichotomy. As indicated by our notion of an anatomical gradient, some DA neurons transmit mixtures of both salience-like and value-like signals; still other DA neurons respond to rewarding but not aversive events (Matsumoto and Hikosaka, 2009b; Bromberg-Martin et al., 2010a). These considerations suggest that some DA neurons may not encode motivational events along our intuitive axis of ‘good’ vs. ‘bad’ and may instead be specialized to support specific forms of adaptive behavior.

Even in the realm of rewards, there is evidence that DA neurons transmit different reward signals to different brain regions (Bassareo and Di Chiara, 1999; Ito et al., 2000; Stefani and Moghaddam, 2006; Wightman et al., 2007; Aragona et al., 2009). Diverse responses reported in the SNc and VTA include neurons that: respond only to the start of a trial (Roesch et al., 2007), perhaps encoding a pure alerting signal; respond differently to visual and auditory modalities (Strecker and Jacobs, 1985), perhaps receiving input from different SC and PPTg neurons; respond to the first or last event in a sequence (Ravel and Richmond, 2006; Jin and Costa, 2010); have sustained activation by risky rewards (Fiorillo et al., 2003); or are activated during body movements (Schultz, 1986; Kiyatkin, 1988a; Puryear et al., 2010; Jin and Costa, 2010) (see also (Phillips et al., 2003b; Stuber et al., 2005)). While each of these response patterns has only been reported in a minority of studies or neurons, this data suggests that DA neurons could potentially be divided into a much larger number of functionally distinct populations.

A final and important consideration is that present recording studies in behaving animals do not yet provide fully conclusive measurements of DA neuron activity, because these studies have only been able to distinguish between DA and non-DA neurons using indirect methods, based on neural properties such as firing rate, spike waveform, and sensitivity to D2 receptor agonists (Grace and Bunney, 1983; Schultz, 1986). These techniques appear to identify DA neurons reliably within the SNc, as indicated by several lines of evidence including comparison of intracellular and extracellular methods, juxtacellular recordings, and the effects of DA-specific lesions (Grace and Bunney, 1983; Grace et al., 2007; Brown et al., 2009). However, recent studies indicate that these techniques may be less reliable in the VTA, where DA and non-DA neurons have a wider variety of cellular properties (Margolis et al., 2006; Margolis et al., 2008; Lammel et al., 2008; Brischoux et al., 2009). Even direct measurements of DA concentrations in downstream structures do not provide conclusive evidence of DA neuron spiking activity, because DA concentrations may be controlled by additional factors such as glutamatergic activation of DA axon terminals (Cheramy et al., 1991) and rapid changes in the activity of DA transporters (Zahniser and Sorkin, 2004). To perform fully conclusive measurements of DA neuron activity during active behavior it will be necessary to use new recording techniques, such as combining extracellular recording with optogenetic stimulation (Jin and Costa, 2010).

Conclusion

An influential concept of midbrain DA neurons has been that they transmit a uniform motivational signal to all downstream structures. Here we have reviewed evidence that DA signals are more diverse than commonly thought. Rather than encoding a uniform signal, DA neurons come in multiple types that send distinct motivational messages about rewarding and non-rewarding events. Even single DA neurons do not appear to transmit single motivational signals. Instead, DA neurons transmit mixtures of multiple signals generated by distinct neural processes. Some reflect detailed predictions about rewarding and aversive experiences, while others reflect fast responses to events of high potential importance.

In addition, we have proposed a hypothesis about the nature of these diverse DA signals, the neural networks that generate them, and their influence on downstream brain structures and on motivated behavior. Our proposal can be seen as a synthesis of previous theories. Many previous theories have attempted to identify DA neurons with a single motivational process such as seeking of valued goals, engaging motivationally salient situations, or reacting to alerting changes in the environment. In our view, DA neurons receive signals related to all three of these processes. Yet rather than distilling these signals into a uniform message, we have proposed that DA neurons transmit these signals to distinct brain structures in order to support distinct neural systems for motivated cognition and behavior. Some DA neurons support brain systems that assign motivational value, promoting actions to seek rewarding events, avoid aversive events, and ensure that alerting events can be predicted and prepared for in advance. Other DA neurons support brain systems that are engaged by motivational salience, including orienting to detect potentially important events, cognitive processing to choose a response and to remember its consequences, and motivation to persist in pursuit of an optimal outcome. We hope that this proposal helps lead us to a more refined understanding of DA functions in the brain, in which DA neurons tailor their signals to support multiple neural networks with distinct roles in motivational control.

ACKNOWLEDGEMENTS

This work was supported by the intramural research program at the National Eye Institute. We also thank Amy Arnsten for valuable discussions.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

FOOTNOTE1

By motivational salience we mean a quantity that is high for both rewarding and aversive events and is low for motivationally neutral (non-rewarding and non-aversive) events. This is similar to the definition given by Berridge and Robinson (1998). Note that motivational salience is distinct from other notions of salience used in neuroscience, such as incentive salience (which applies only to desirable events; (Berridge and Robinson, 1998)) and perceptual salience (which applies to motivationally neutral events such as moving objects and colored lights; (Bisley and Goldberg, 2010)).

FOOTNOTE2

Note that the signals of motivational salience-coding DA neurons are distinct from the classic notions of “associability” and “change in associability” that have been proposed to regulate the rate of reinforcement learning (e.g. (Pearce and Hall, 1980)). Such theories state that animals learn (and adjust learning rates) from both positive and negative prediction errors. Although these DA neurons may contribute to learning from positive prediction errors, during which they can have a strong response (e.g. to unexpected reward delivery), they may not contribute to learning from negative prediction errors, during which they can have little or no response (e.g. to unexpected reward omission) (Fig. 4B).

REFERENCES

- Ahlbrecht M, Weber M. The resolution of uncertainty: an experimental study. Journal of institutional and theoretical economics. 1996;152:593–607.

- Albin RL, Young AB, Penney JB. The functional anatomy of basal ganglia disorders. Trends in neurosciences. 1989;12:366–375. doi: 10.1016/0166-2236(89)90074-x.